Bug #36725

closed

luminous: Apparent Memory Leak in OSD

Description

Since the last update (late October), I have been seeing an apparent memory leak in the OSD process on two Ceph servers in a small business environment.

Debian stretch, kernel 4.9.0-8-amd64, luminous with Bluestore.

Two servers, each with two OSD daemons - 8 TB storage each (2x4TB) and 8GB RAM.

Memory use of each OSD process has been observed to grow at about 100MB per hour; I have been rebooting the servers when each OSD process approaches 50% of physical memory. After a reboot they return to about 10% use each and begin growing again.
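As a rough cross-check of the figures above, here is a minimal sketch of how long one OSD process would take to climb from about 10% to about 50% of physical memory. It assumes 8GB means 8192 MiB and that growth is a steady 100 MiB per hour; the function name `hours_until` is made up for illustration.

```python
def hours_until(threshold_fraction, start_fraction, total_ram_mib, growth_mib_per_hour):
    """Hours for a process to grow from start_fraction to threshold_fraction of RAM."""
    start_mib = start_fraction * total_ram_mib
    limit_mib = threshold_fraction * total_ram_mib
    return (limit_mib - start_mib) / growth_mib_per_hour


# Assumed inputs: 8192 MiB of RAM, 10% after reboot, 50% reboot threshold,
# 100 MiB/hour growth per OSD process.
hours = hours_until(0.50, 0.10, 8 * 1024, 100)
print("about %.0f hours between reboots" % hours)
```

Under these assumptions the interval comes out at a bit under a day and a half per OSD process.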

Since no one else has reported this, I believe it is likely due to my configuration, but both systems have been extremely stable up to this point.

Maybe related to my non-optimal replicas? (triple replicas on two servers)

Updated by John Jaser over 5 years ago

Note: Downgrading both OSD servers to v12.2.8 returned memory usage to normal.

Updated by Nathan Cutler over 5 years ago

- Priority changed from Normal to Urgent

raising priority since this might be a regression in 12.2.9

Updated by Greg Farnum over 5 years ago

- Project changed from Ceph to RADOS

- Category deleted (OSD)

- Component(RADOS) OSD added

Updated by Sage Weil over 5 years ago

- Subject changed from Apparent Memory Leak in OSD to luminous: Apparent Memory Leak in OSD

- Status changed from New to Need More Info

Can you dump the mempools (ceph daemon osd.NNN dump_mempools) several times over the growth of the process so we can see what is consuming the memory?
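One possible way to collect and compare those dumps is sketched below. It shells out to the `ceph daemon osd.NNN dump_mempools` command quoted above and ranks pools by byte growth between two samples. The JSON layout is an assumption: the sketch accepts pools either nested under `mempool` and `by_pool` or listed at the top level next to `total`, and should be checked against the actual output of your release. The helper names (`fetch_mempools`, `pool_bytes`, `growth`, `sample`) are hypothetical.

```python
import json
import subprocess
import time


def fetch_mempools(osd_id):
    """Run `ceph daemon osd.<id> dump_mempools` on the OSD host and parse the JSON."""
    out = subprocess.check_output(
        ["ceph", "daemon", "osd.%d" % osd_id, "dump_mempools"]
    )
    return json.loads(out)


def pool_bytes(dump):
    """Return {pool_name: bytes} from a parsed dump.

    Assumption: pools are either nested under "mempool" -> "by_pool"
    or listed at the top level next to "total".
    """
    pools = dump.get("mempool", {}).get("by_pool", dump)
    return {
        name: stats["bytes"]
        for name, stats in pools.items()
        if name != "total" and isinstance(stats, dict) and "bytes" in stats
    }


def growth(earlier, later):
    """Return (pool, byte_delta) pairs between two dumps, largest growth first."""
    before, after = pool_bytes(earlier), pool_bytes(later)
    deltas = {name: after[name] - before.get(name, 0) for name in after}
    return sorted(deltas.items(), key=lambda kv: kv[1], reverse=True)


def sample(osd_id, count=3, interval_s=3600):
    """Take `count` dumps, `interval_s` seconds apart, and return them as a list."""
    dumps = []
    for i in range(count):
        dumps.append(fetch_mempools(osd_id))
        if i < count - 1:
            time.sleep(interval_s)
    return dumps
```

For example, `dumps = sample(0)` followed by `growth(dumps[0], dumps[-1])` would list which pools grew most on osd.0 over the sampling window. If the mempool totals stay flat while the process RSS keeps growing, the memory is being consumed outside the tracked pools.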

Updated by John Jaser over 5 years ago

- File 2.0G_free.txt added

- File 3.1G_free.txt added

- File 4.5G_free.txt added

Upgraded one OSD server to 12.2.9. Clean reboot. Generating hourly report on memory and mempools. Three examples attached.

Updated by John Jaser over 5 years ago

Upgraded one OSD server to 12.2.10: Same symptom observed. See attached. Two OSD daemons use up all physical memory in about 25 hours. 12.2.8 runs stable.

Updated by Konstantin Shalygin over 5 years ago

John, are you aware of the new 12.2.9 options osd_memory_target and bluestore_cache_autotune?

You should try disabling autotune or lowering osd_memory_target. I don't know exactly how they work together; the documentation is still unclear.

Updated by John Jaser over 5 years ago

Konstantin: thanks for pointing that out. That looks like the issue. Both OSD servers have 8GB RAM total, each running two OSD daemons, so the default osd_memory_target setting of 4294967296 (4 GiB per OSD) leaves no headroom for the OS. (This sort of breaks the 1GB RAM per TB of storage rule of thumb for my setup.)

I changed the setting to osd_memory_target = 2684354560
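The arithmetic behind that change can be sketched as follows. It assumes 8GB means exactly 8 GiB (usable RAM is normally a little less) and that each OSD sits at its target; in practice osd_memory_target is a goal the OSD works toward rather than a hard cap, so real usage can overshoot. The function name `memory_budget` is made up for illustration.

```python
GIB = 1024 ** 3


def memory_budget(total_ram_bytes, osd_count, osd_memory_target):
    """Bytes left for the OS and everything else if each OSD sits at its target."""
    return total_ram_bytes - osd_count * osd_memory_target


total_ram = 8 * GIB          # assumption: "8GB" treated as 8 GiB
default_target = 4294967296  # 4 GiB, the default quoted in this thread
tuned_target = 2684354560    # 2.5 GiB, the value chosen here

left_default = memory_budget(total_ram, 2, default_target) / GIB
left_tuned = memory_budget(total_ram, 2, tuned_target) / GIB
print("default target leaves %.1f GiB" % left_default)  # 0.0 GiB
print("tuned target leaves %.1f GiB" % left_tuned)      # 3.0 GiB
```

With two OSDs per host the default target accounts for all 8 GiB, while the 2.5 GiB target leaves about 3 GiB for the kernel, page cache and other processes.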

After 55 hours uptime, free memory is about 2.1G which is just slightly over target, and looks stable.

Thanks to all.

Updated by Greg Farnum over 5 years ago

- Status changed from Need More Info to Closed

Updated by Konstantin Shalygin over 5 years ago

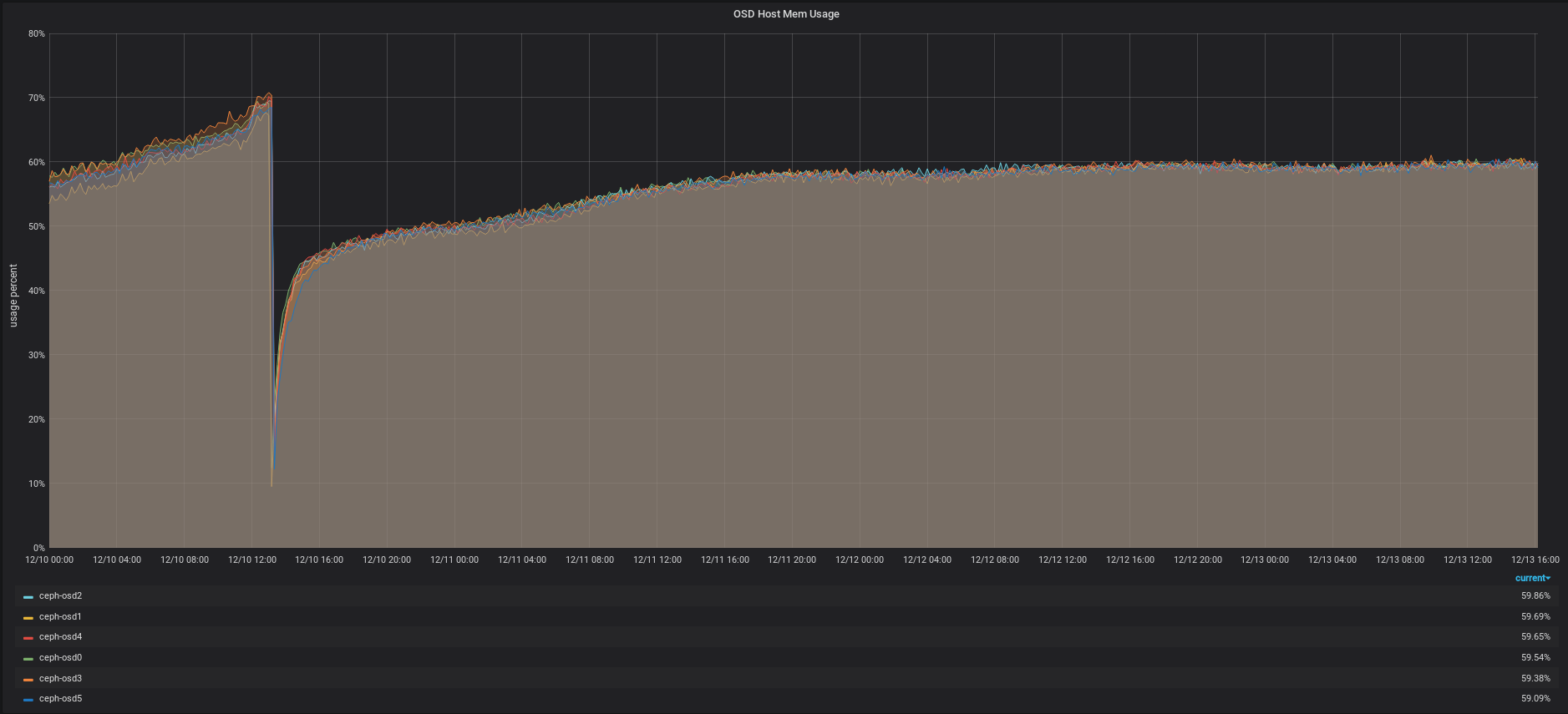

- File Selection_001.png added

I took dumps while tuning the osd_memory_target value. Perhaps this data will be useful in the future.

ceph-post-file: 97de181d-f5f9-44cd-8798-877a803df70f

I set osd_memory_target to 3GB; memory consumption is about 7-9% higher than with the default Bluestore settings in 12.2.8.