Feature #63889

openOSD (trim_maps): Missing periodic MOSDMap updates from monitor

0%

Description

OSDMap trimming lower bound is originated from the monitor's `osdmap_first_committed` epoch.

This value is shared from the monitor to the OSDs through OSDMap messages (MOSDMaps).

In the scenario where there is no OSDMap related activity, the monitor may have new `osdmap_first_committed` epoch without informing the OSDs about it.

As a result the OSDSuperblock::cluster_osdmap_trim_lower_bound (mon's `osdmap_first_committed`)

will remain "stale" until the next MOSDMap shared from the monitor. Since the tlb is stale, trim_maps calls won't actually trim any maps.

To resolve this scenario, we can enhance the monitor's behavior to share a MOSDMap with the new cluster_osdmap_trim_lower_bound

regardless of any MOSDMap activity.

ref-PR: (osd: fix scheduling of OSD::trim_maps is not timely) https://github.com/ceph/ceph/pull/54686

Problem Description

1. ceph -s check HEALTH_OK, and all pg are active+lean

2. ceph report | grep osdmap_, check the latest osdmap epoch is 25307, the oldest osdmap epoch is 24807, the difference between the two is 500 osdmaps

3. ceph tell osd.0 status, view

- oldest_map is 21594

- superblock.cluster_osdmap_trim_lower_bound=21594, should be 24807, not as expected

- newest_map is 25307

- The difference of `3714` osdmap is not as expected

4. ceph -s --debug_ms=1 > aa 2>&1 show that cluster_osdmap_trim_lower_bound = 24807 of osd_map

[root@zjw-cmain-dev build]# ceph -s

cluster:

id: 713551e4-fec5-453b-ac9e-ae0716d235cb

health: HEALTH_OK

services:

mon: 1 daemons, quorum a (age 5d)

mgr: x(active, since 5d)

osd: 2 osds: 2 up (since 2d), 2 in (since 3d)

flags noout,nobackfill,norebalance,norecover,noscrub,nodeep-scrub

data:

pools: 2 pools, 256 pgs

objects: 10.26k objects, 10 GiB

usage: 10 GiB used, 9.2 TiB / 9.2 TiB avail

pgs: 256 active+clean

[root@zjw-cmain-dev build]# ceph osd dump | head

epoch 25307

[root@zjw-cmain-dev build]# ceph report | grep osdmap_

report 974124877

"osdmap_clean_epochs": {

"osdmap_first_committed": 24807,

"osdmap_last_committed": 25307,

25307 - 24807 = 500 epoch osdmap

[root@zjw-cmain-dev build]# ceph report | jq .osdmap_clean_epochs

report 1902116976

{

"min_last_epoch_clean": 25306,

"last_epoch_clean": {

"per_pool": [

{

"poolid": 1,

"floor": 25307

},

{

"poolid": 2,

"floor": 25306

}

]

},

"osd_epochs": [

{

"id": 0,

"epoch": 25307

},

{

"id": 1,

"epoch": 25307

}

]

}

```

osd.0.log

```

[root@zjw-cmain-dev build]# tail -n 1000000 out/osd.0.log |grep trim_maps | tail -n 10

2023-12-25T15:23:02.055+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T15:24:04.020+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T15:25:05.060+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T15:26:06.941+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T15:27:08.033+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T15:28:09.170+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T15:29:11.127+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T15:30:12.387+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T15:31:14.312+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T15:32:16.066+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

```

```

[root@zjw-cmain-dev build]# ceph tell osd.0 status

{

"cluster_fsid": "713551e4-fec5-453b-ac9e-ae0716d235cb",

"osd_fsid": "bc068df8-0acb-4164-8262-3813b40ce736",

"whoami": 0,

"state": "active",

"maps": "[21594~3714]",

"cluster_osdmap_trim_lower_bound": 21594,

"num_pgs": 128

}

[root@zjw-cmain-dev build]# ceph tell osd.1 status

{

"cluster_fsid": "713551e4-fec5-453b-ac9e-ae0716d235cb",

"osd_fsid": "a62eb046-59cb-40f7-bf63-82c228ef5e30",

"whoami": 1,

"state": "active",

"maps": "[21594~3714]",

"cluster_osdmap_trim_lower_bound": 21594,

"num_pgs": 128

}

```

```

[root@zjw-cmain-dev build]# ceph -s --debug_ms=1 > aa 2>&1

[root@zjw-cmain-dev build]# grep osdmap aa

2023-12-25T16:10:19.628+0800 7ffb2a3fc700 1 -- 127.0.0.1:0/810641284 --> v2:127.0.0.1:40815/0 -- mon_subscribe({osdmap=0}) v3 -- 0x7ffb24111330 con 0x7ffb240fef90

[root@zjw-cmain-dev build]# grep osd_map aa

2023-12-25T16:10:19.630+0800 7ffb20ff9700 1 -- 127.0.0.1:0/810641284 <== mon.0 v2:127.0.0.1:40815/0 5 ==== osd_map(25307..25307 src has 24807..25307) v4 ==== 2651+0+0 (crc 0 0 0) 0x7ffb14032bb0 con 0x7ffb240fef90

Monitor::handle_subscribe

OSDMonitor::check_osdmap_sub

sub->session->con->send_message(build_latest_full(sub->session->con_features));

OSDMonitor::build_latest_full

class MOSDMap final : public Message {

void print(std::ostream& out) const override {

out << "osd_map(" << get_first() << ".." << get_last();

if (cluster_osdmap_trim_lower_bound || newest_map)

out << " src has " << cluster_osdmap_trim_lower_bound

<< ".." << newest_map;

out << ")";

}

};

cluster_osdmap_trim_lower_bound = 24807

newest_map = 25307

reproduce

1. # cat start-cluster.sh

#!/bin/sh

set -x

rm -rf dev ceph.conf out

MON=1 MGR=1 OSD=1 FS=0 MDS=0 RGW=0 ../src/vstart.sh -n -l -X -b --msgr2 \

--bluestore-devs /dev/disk/by-id/ata-ST10000NM0196-2AA131_ZA29A049 \

--bluestore-db-devs /dev/disk/by-id/ata-INTEL_SSDSC2KG480G8_BTYG909204YN480BGN-part3 \

--bluestore-wal-devs /dev/disk/by-id/ata-INTEL_SSDSC2KG480G8_BTYG909204YN480BGN-part1

2. # ../src/vstart.sh --inc-osd

3. # crush tree

osd.0 in root default-root-0

osd.1 in root default-root-1

4. # create pool

create test-pool-0 with default-root-0 root. (single replicas)

create test-pool-1 with default-root-1 root. (single replicas)

5. # kill -9 <osd.0.pid>

6. # change osdmap

for i in {1..10000}; do

ceph osd pool application rm "test-pool-1" rgw "abc"

ceph osd pool application set "test-pool-1" rgw "abc" "efg"

ceph osd dump | head -n 1

done

7. # record osdmap info

ceph osd dump | head

ceph report | grep osdmap

ceph report | jq .osdmap_clean_epochs

ceph tell osd.1 status

8. # start osd.0

init-ceph start osd.0

9. # record osdmap info

ceph tell osd.0 status

ceph tell osd.1 status

ceph osd dump | head

ceph report | grep osdmap

ceph report | jq .osdmap_clean_epochs

10. # ceph daemon osd.0 config set debug_osd 20

ceph daemon osd.1 config set debug_osd 20

ceph daemon osd.0 config set osd_map_trim_min_interval 60

ceph daemon osd.1 config set osd_map_trim_min_interval 60

11. tail -f out/osd.0.log | grep trim_maps

Files

Updated by jianwei zhang 4 months ago

- 2023-12-25 ceph osd down osd.1 trigger osdmap change

A temporary solution is to down an osd, triggering osdmap changes,

Let mon update cluster_osdmap_trim_lower_bound = first_committed epoch

osd.0.log

```

2023-12-25T16:27:34.178+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T16:28:36.027+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T16:29:37.190+0800 7f9d5dfa3700 5 osd.0 25307 trim_maps: min=21594 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=21594 service.map_cache.cached_key_lower_bound=25258

2023-12-25T16:30:02.824+0800 7f9d50d7a700 5 osd.0 25307 trim_maps: min=24807 oldest_map=21594 superblock.cluster_osdmap_trim_lower_bound=24807 service.map_cache.cached_key_lower_bound=25258

2023-12-25T16:30:04.182+0800 7f9d50d7a700 5 osd.0 25308 trim_maps: min=24807 oldest_map=21609 superblock.cluster_osdmap_trim_lower_bound=24807 service.map_cache.cached_key_lower_bound=25258

2023-12-25T16:30:06.448+0800 7f9d50d7a700 5 osd.0 25309 trim_maps: min=24807 oldest_map=21624 superblock.cluster_osdmap_trim_lower_bound=24807 service.map_cache.cached_key_lower_bound=25258

2023-12-25T16:31:08.162+0800 7f9d5dfa3700 5 osd.0 25311 trim_maps: min=24807 oldest_map=21639 superblock.cluster_osdmap_trim_lower_bound=24807 service.map_cache.cached_key_lower_bound=25262

2023-12-25T16:32:10.047+0800 7f9d5dfa3700 5 osd.0 25311 trim_maps: min=24807 oldest_map=21654 superblock.cluster_osdmap_trim_lower_bound=24807 service.map_cache.cached_key_lower_bound=25262

2023-12-25T16:33:11.052+0800 7f9d5dfa3700 5 osd.0 25311 trim_maps: min=24807 oldest_map=21669 superblock.cluster_osdmap_trim_lower_bound=24807 service.map_cache.cached_key_lower_bound=25262

2023-12-25T16:34:12.234+0800 7f9d5dfa3700 5 osd.0 25311 trim_maps: min=24807 oldest_map=21684 superblock.cluster_osdmap_trim_lower_bound=24807 service.map_cache.cached_key_lower_bound=25262

2023-12-25T16:35:14.175+0800 7f9d5dfa3700 5 osd.0 25311 trim_maps: min=24807 oldest_map=21699 superblock.cluster_osdmap_trim_lower_bound=24807 service.map_cache.cached_key_lower_bound=25262

```

Updated by jianwei zhang 4 months ago

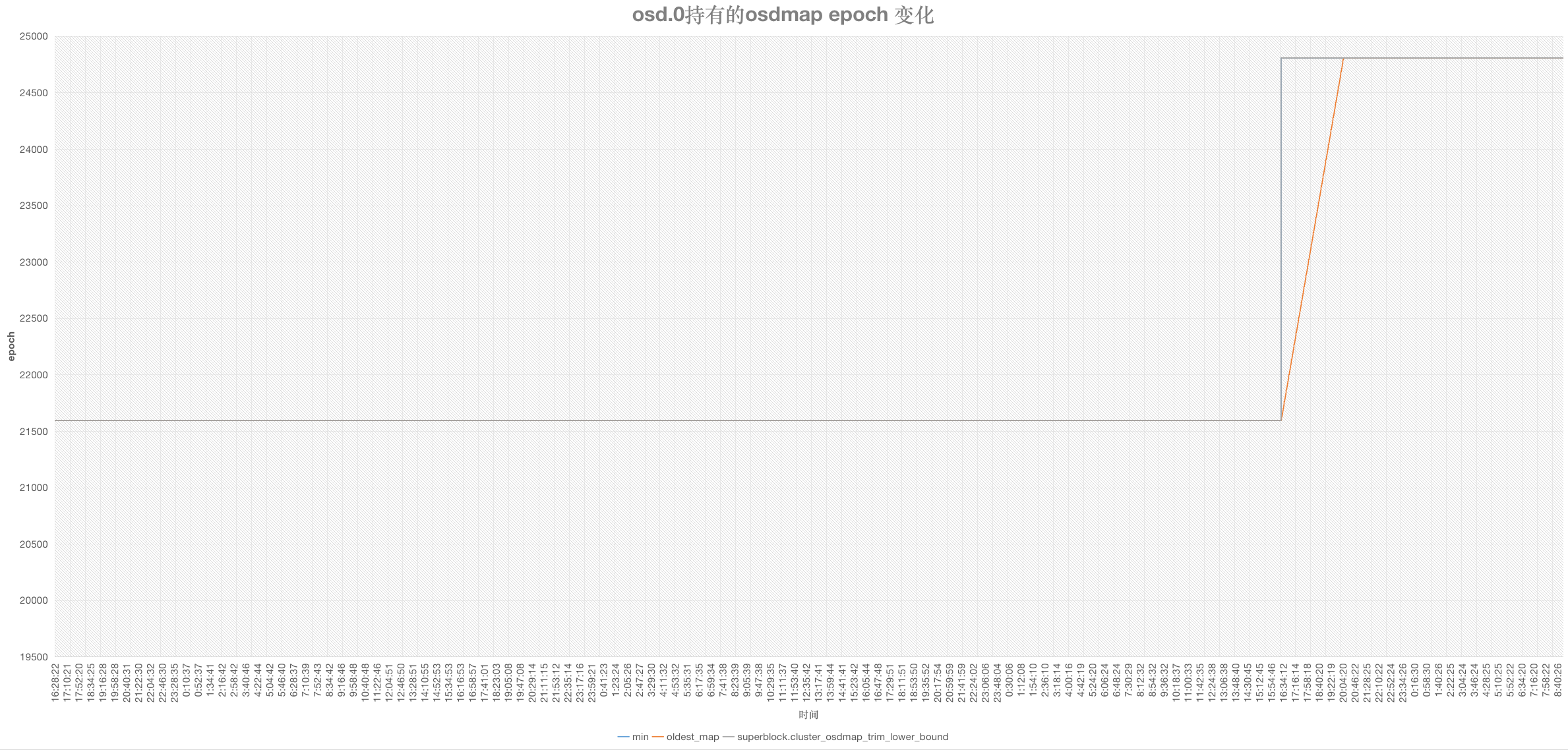

- File osd0-osdmap-change.png osd0-osdmap-change.png added

After 12 hours, observe the number of osdmaps of osd,0:

[root@zjw-cmain-dev build]# ceph daemon osd.0 status

{

"cluster_fsid": "713551e4-fec5-453b-ac9e-ae0716d235cb",

"osd_fsid": "bc068df8-0acb-4164-8262-3813b40ce736",

"whoami": 0,

"state": "active",

"maps": "[24807~505]",

"cluster_osdmap_trim_lower_bound": 24807,

"num_pgs": 128

}

[root@zjw-cmain-dev build]# ceph report | grep osdmap_

report 2402830739

"osdmap_clean_epochs": {

"osdmap_first_committed": 24807,

"osdmap_last_committed": 25311,

[root@zjw-cmain-dev build]# ceph report | jq .osdmap_clean_epochs

report 744581619

{

"min_last_epoch_clean": 25309,

"last_epoch_clean": {

"per_pool": [

{

"poolid": 1,

"floor": 25309

},

{

"poolid": 2,

"floor": 25311

}

]

},

"osd_epochs": [

{

"id": 0,

"epoch": 25311

},

{

"id": 1,

"epoch": 25311

}

]

}

The line chart of the picture shows:

- min: Indicates that the OSD::trim_maps function selects the epoch of the minimum boundary.

- superblock.cluster_osdmap_trim_lower_bound: Indicates the maximum osdmap trim boundary of the cluster obtained by osd.0 when receiving the MOSDMap message.

- oldest_map: indicates the oldest osdmap epoch held by osd.0

- Among them, the polylines of min/superblock.cluster_osdmap_trim_lower_bound are completely overlapping

The reason why osd.0 min/superblock.cluster_osdmap_trim_lower_bound increased from epoch 21594 to 24807

- Executing ceph osd down osd.1 near this point in epoch 24807 triggered the osdmap change

- osd.0 received the MOSDMap message sent by mon, in which cluster_osdmap_trim_lower_bound was updated to 24807

- So the OSD::trim_maps function of osd.0 got 24807 when getting min

The reason why osd.0 oldest_map has a slash change:

- Due to the this pr https://github.com/ceph/ceph/pull/54686, periodic OSD::trim_maps calls are added in OSD::tick to trim osdmap epochs in the [oldest_map,superblock.cluster_osdmap_trim_lower_bound) interval

- Call OSD::trim_maps every 1 minute

- Trim 30 object transactions and 15 osdmaps each time

- So, there is a relatively slow trend of cropping

in conclusion:

- Currently even though periodic OSD::trim_maps calls are added to OSD::tick,

- In some scenarios, thousands of osdmaps held by ceph-osd that should be cropped still cannot be cropped in time.

- You can only trigger clipping until the next time osdmap changes.

- The reason:

(1) ceph-osd’s superblock.cluster_osdmap_trim_lower_bound can only rely on the cluster_osdmap_trim_lower_bound parameter carried in the MOSDMap message;

(2) ceph-mon trim osdmap is min_last_epoch_clean reported periodically by ceph-osd through send_beacon, and this report is one-way ceph-osd ==> ceph-mon,

(3) If osdmap has not changed, even if all pg are in active+clean state, and ceph-mon has completed trim osdmap to a new boundary, since we have no change in osdmap epoch after ceph-mon trim osdmap, there is no change in osdmap epoch. It is impossible to notify ceph-osd in time that the current cluster_osdmap_trim_lower_bound has been updated, and ceph-osd is still holding the old osdmap.

Possible solutions:

After ceph-mon completes trim osdmap, update the osdmap epoch and spread it to all ceph-osd through MOSDMap.

After ceph-osd obtains the new cluster_osdmap_trim_lower_bound, it trims it periodically.

Updated by jianwei zhang 4 months ago

Solution 1:

Can we add a new cluster_osdmap_trim_lower_bound member variable in OSDMap?

Whenever Mon completes the trimming of OSDMap,

it adds another OSDMap change, that is, cluster_osdmap_trim_lower_bound = get_first_committed() and epoch + 1,

and then passes it to each osd.

Updated by jianwei zhang 4 months ago

Solution 2:

Rely on MOSDBeacon and MOSDMap message interaction to update the superblock.cluster_osdmap_trim_lower_bound of each ceph-osd。

(1) When ceph-osd sends_beacon to ceph-mon OSD::send_beacon, add the superblock.cluster_osdmap_trim_lower_bound variable of the current osd to MOSDBeacon and send it to ceph-mon together;

(2) After ceph-mon receives MOSDBeacon, if MOSDBeacon.cluster_osdmap_trim_lower_bound < get_first_committed(), then send an empty MOSDMap to the ceph-osd with the latest cluster_osdmap_trim_lower_bound

(3) After ceph-osd receives MOSDMap, if MOSDMap.cluster_osdmap_trim_lower_bound < superblock.cluster_osdmap_trim_lower_bound, update superblock.cluster_osdmap_trim_lower_bound

(4) In OSD::tick of ceph-osd, you can trim osdmap periodically

Updated by Matan Breizman 4 months ago

- Status changed from New to Need More Info

- Assignee set to Matan Breizman

jianwei zhang wrote:

Solution 1:

Can we add a new cluster_osdmap_trim_lower_bound member variable in OSDMap?Whenever Mon completes the trimming of OSDMap,

it adds another OSDMap change, that is, cluster_osdmap_trim_lower_bound = get_first_committed() and epoch + 1,

and then passes it to each osd.

IIUC, this is already true, See `OSDMonitor::build_latest_full` or OSDMonitor::build_incremental`OSDMonitor::build_incremental`:

m->cluster_osdmap_trim_lower_bound = get_first_committed();

Solution 2:

Rely on MOSDBeacon and MOSDMap message interaction to update the superblock.cluster_osdmap_trim_lower_bound of each ceph-osd。

...

After ceph-osd receives MOSDMap, if MOSDMap.cluster_osdmap_trim_lower_bound < superblock.cluster_osdmap_trim_lower_bound, update superblock.cluster_osdmap_trim_lower_bound

See OSD::handle_osd_map:

if (superblock.cluster_osdmap_trim_lower_bound < m->cluster_osdmap_trim_lower_bound) {

superblock.cluster_osdmap_trim_lower_bound = m->cluster_osdmap_trim_lower_bound;

I don't yet fully understand what is the bug here, trimming factors in multiple config options that affect the triggering trim_maps. Some of the key ones are: `osd_beacon_report_interval`, `paxos_service_trim_min` and `mon_min_osdmap_epochs`.

Reducing OSD beacon intervals and mon's OSDMap related options will also decrease the "long time" of no calls to trim_maps.

Please try these options and let me know if you still experience any issues.

Updated by jianwei zhang 4 months ago

The BUG is:

(1) ceph-mon has completed osdmap trim,

(2) But in a scenario where osdmap has not changed for a long time,

1> ceph-osd cannot receive MOSDMap.cluster_osdmap_trim_lower_bound,

2> There is no way to update superblock.cluster_osdmap_trim_lower_bound,

3> Eventually ceph-osd cannot perform OSD::trim_maps

Updated by jianwei zhang 4 months ago

Hi matan-B,

(1)ceph-mon has completed osdmap trim

# ceph report | grep osdmap_

report 974124877

"osdmap_clean_epochs": {

"osdmap_first_committed": 24807,

"osdmap_last_committed": 25307, //25307 - 24807 = 500 osdmaps

(2)But in a scenario where osdmap has not changed for a long time,

1> ceph-osd cannot receive MOSDMap.cluster_osdmap_trim_lower_bound,

2> There is no way to update superblock.cluster_osdmap_trim_lower_bound,

3> Eventually ceph-osd cannot perform OSD::trim_maps

[root@zjw-cmain-dev build]# ceph tell osd.0 status

{

"cluster_fsid": "713551e4-fec5-453b-ac9e-ae0716d235cb",

"osd_fsid": "bc068df8-0acb-4164-8262-3813b40ce736",

"whoami": 0,

"state": "active",

"maps": "[21594~3714]",

"cluster_osdmap_trim_lower_bound": 21594,

"num_pgs": 128

}

[root@zjw-cmain-dev build]# ceph tell osd.1 status

{

"cluster_fsid": "713551e4-fec5-453b-ac9e-ae0716d235cb",

"osd_fsid": "a62eb046-59cb-40f7-bf63-82c228ef5e30",

"whoami": 1,

"state": "active",

"maps": "[21594~3714]", // osd store 3714 osdmaps, more than 500 osdmaps

"cluster_osdmap_trim_lower_bound": 21594, //but osd superblock.tlb still is 21594, not is 24807

"num_pgs": 128

}

Updated by Matan Breizman 4 months ago

- Tracker changed from Bug to Feature

- Status changed from Need More Info to New

- Priority changed from Normal to Low

I think that this is more of a RFE than a bug. If I could suggest a rephrase:

OSD (trim_maps): Missing periodic MOSDMap updates from monitor

OSDMap trimming lower bound is originated from the monitor's `osdmap_first_committed` epoch.

This value is shared from the monitor to the OSDs through OSDMap messages (MOSDMaps).

In the scenario where there is no OSDMap related activity, the monitor may have new `osdmap_first_committed` epoch without informing the OSDs about it.

As a result the OSDSuperblock::cluster_osdmap_trim_lower_bound (mon's `osdmap_first_committed`)

will remain "stale" until the next MOSDMap shared from the monitor. Since the tlb is stale, trim_maps calls won't actually trim any maps.

To resolve this scenario, we can enhance the monitor's behavior to share a MOSDMap with the new cluster_osdmap_trim_lower_bound

regardless of any MOSDMap activity.

The way I see it, we can reply to the OSD using with mon's latest map in one of the following options (OSDMonitor::send_latest):

- Send the latest mon MOSDMap periodically (every n OSD beacons? / ticks?)

- Add the OSD's local cluster_osdmap_trim_lower_bound to MOSDBeacon to detect "stale" ones and share latest accordingly.

- Re-use MOSDFull logic, once every `osd_mon_report_interval` the OSD asks the monitor for full update if needed (See OSD::send_full_update()).

(Enhance existing implementation?)

Thank you for the report jianwei. Let me know if I understood correctly.

Updated by jianwei zhang 4 months ago

Yes, your understanding is completely correct.

Let’s think about possible solutions together,

To completely strengthen the logic related to mon/osd osdmap trim,

Avoid expired osdmaps from occupying a large amount of storage space for a long time.

Thank you

Updated by Matan Breizman 4 months ago

- Subject changed from OSD trim_maps - osdmap not change for a long time and not trigger trim_maps in ceph-osd to OSD (trim_maps): Missing periodic MOSDMap updates from monitor

- Description updated (diff)