Bug #63013

I/O hangs while writing to a file from two processes, possibly due to metadata corruption


Description

I have three clients with the same CephFS file system mounted.

There is a file named 'GOKU_yplzmcdzkl_1692173797.mym'. I use a Python script that writes data to it from two concurrent processes, each opening the file with:

`os.open('GOKU_yplzmcdzkl_1692173797.mym', 1)`
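A minimal reproduction sketch of this access pattern, assuming the file already exists on the CephFS mount and using arbitrary chunk sizes and a timeout chosen for illustration. On Linux, flag `1` is `os.O_WRONLY` (write-only, no `O_CREAT`, no `O_APPEND`), so both processes write from offset 0 over each other. Any writer still alive after the timeout is reported as possibly hung.

```python
import multiprocessing as mp
import os


def writer(path: str, fill: bytes, n_chunks: int) -> None:
    # Equivalent to os.open(path, 1) on Linux: write-only, no O_CREAT or
    # O_APPEND, so the file must exist and each writer starts at offset 0.
    fd = os.open(path, os.O_WRONLY)
    try:
        for _ in range(n_chunks):
            os.write(fd, fill)
    finally:
        os.close(fd)


def run_concurrent_writers(path: str, n_procs: int = 2, n_chunks: int = 1000,
                           chunk_size: int = 64 * 1024,
                           timeout: float = 300.0) -> list:
    """Start n_procs writers on the same file; return PIDs still alive after the timeout."""
    # "fork" avoids re-importing this module in the children (Unix only).
    ctx = mp.get_context("fork")
    procs = [
        ctx.Process(target=writer,
                    args=(path, bytes([ord("A") + i]) * chunk_size, n_chunks))
        for i in range(n_procs)
    ]
    for p in procs:
        p.start()
    stuck = []
    for p in procs:
        p.join(timeout)  # waits up to `timeout` seconds per process
        if p.is_alive():
            # A process in D state cannot be killed, so only report it.
            stuck.append(p.pid)
    return stuck
```

For example, `run_concurrent_writers("/mnt/cephfs/GOKU_yplzmcdzkl_1692173797.mym")` (hypothetical mount point) returns an empty list when both writers finish in time.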

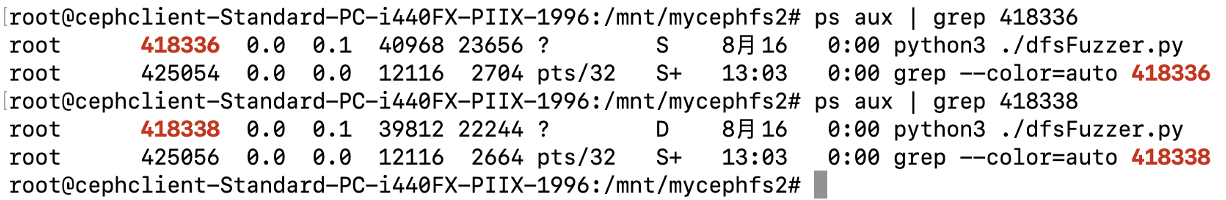

Both processes hang and the writes stall. Opening the file with vim also hangs. `ps aux` shows the two processes in states S (interruptible sleep) and D (uninterruptible sleep).
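On Linux, the state letter shown by `ps aux` comes from `/proc/<pid>/stat`. A small sketch that lists every process currently in `D` state, which could help capture exactly which processes are blocked when the hang occurs; it assumes a Linux host with `/proc` mounted.

```python
import os


def proc_state(pid: int) -> str:
    """Return the single-letter state of pid from /proc/<pid>/stat (Linux only)."""
    with open(f"/proc/{pid}/stat") as f:
        data = f.read()
    # Field 2 (comm) is in parentheses and may contain spaces or ')',
    # so the state is the character after the last ") ".
    return data[data.rindex(")") + 2]


def d_state_processes() -> list:
    """List (pid, command) for every process in uninterruptible sleep."""
    found = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        pid = int(entry)
        try:
            if proc_state(pid) == "D":
                with open(f"/proc/{pid}/comm") as f:
                    found.append((pid, f.read().strip()))
        except (FileNotFoundError, ProcessLookupError, PermissionError):
            # The process exited (or is not readable) while we were scanning.
            continue
    return found
```

Running `print(d_state_processes())` on the client while the writers are stuck would show whether the D-state process is one of the writers.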

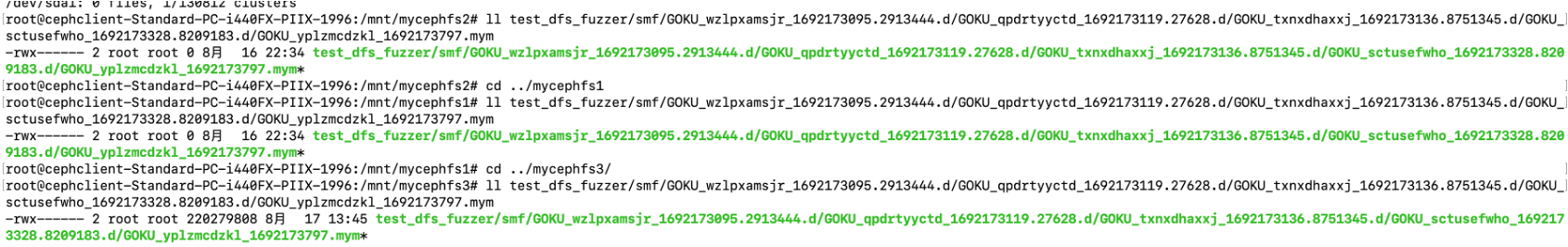

I also noticed that the file size is reported as 0. When I checked the file's status from each client, the first two clients reported a size of 0, while `ll` on the third client showed a size of 210MB. After running that `ll` command, the writes were no longer stuck.
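To compare what each client sees, a sketch that records the size and mtime returned by `os.stat`; it would be run on each of the three clients against the same file (the mount path in the usage line is hypothetical), and the snapshots compared. Note that `ll` also stats the file, so running this may influence the hang in the same way.

```python
import os
import socket
from datetime import datetime, timezone


def stat_snapshot(path: str) -> dict:
    """Record size and mtime of path as seen by this client."""
    st = os.stat(path)
    return {
        "host": socket.gethostname(),
        "size": st.st_size,
        "mtime": datetime.fromtimestamp(st.st_mtime, tz=timezone.utc).isoformat(),
    }


def disagreements(snapshots: list) -> set:
    """Return the fields ('size', 'mtime') on which the clients disagree."""
    return {k for k in ("size", "mtime") if len({s[k] for s in snapshots}) > 1}
```

Usage: collect `stat_snapshot("/mnt/cephfs/GOKU_yplzmcdzkl_1692173797.mym")` from each client, then pass the list to `disagreements`.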

This is the same issue as https://tracker.ceph.com/issues/62480.


Updated by Venky Shankar 7 months ago

The tracker mentioned above was filed under the ceph project, so it did not show up in our weekly bug scrub.

We need more information to debug this, starting with the MDS and client logs. Do you have those? Was anything unusual happening in the cluster, such as network issues or failing OSDs?

Updated by Venky Shankar 7 months ago

- Related to Bug #62480: I/O is hang while I try to write to a file with two processes, maybe due to metadata corruption added

Updated by Venky Shankar 7 months ago

- Status changed from Triaged to Need More Info

Updated by fuchen ma 7 months ago

As far as I can tell, there were no network issues or OSD failures.

I will try to reproduce this (it reliably occurs within about 2-4 hours of running my application) and collect all the logs. Could you tell me exactly which logs are needed and where to find them?

Updated by Venky Shankar 7 months ago

Thank you. What ceph versions are the MDS and the client running? If you are using the kernel driver, what's the kernel version?

The client debug logs (if you are using the user-space driver) and the MDS debug logs would be required. The screenshots you attached show that, for the first two clients, not only the size but also the mtime differs from what the third client reports. Running `ll` possibly kicked the file lock (filelock) state in the MDS somehow and allowed I/O to make progress; I do not have an explanation for that yet. If you could reproduce the issue and share the logs, that would be ideal.
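While the writers are stuck, a snapshot of the requests the MDS is still holding may help narrow down which lock they are waiting on. A sketch, assuming the MDS daemon name is known and that `ceph tell mds.<name> dump_ops_in_flight` returns JSON with an `ops` list whose entries carry `age`, `description` and `type_data.flag_point`; these field names should be checked against the actual output of the release in use, and if `ceph tell` does not accept the command there, the same command can be sent through the daemon's admin socket.

```python
import json
import subprocess


def mds_ops_in_flight(mds_name: str) -> dict:
    """Run `ceph tell mds.<name> dump_ops_in_flight` and parse its JSON output."""
    out = subprocess.run(
        ["ceph", "tell", f"mds.{mds_name}", "dump_ops_in_flight"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


def blocked_ops(dump: dict, min_age: float = 30.0) -> list:
    """Return (age, flag_point, description) for ops older than min_age seconds.

    Assumes each op has 'age', 'description' and 'type_data' -> 'flag_point';
    missing fields fall back to defaults instead of raising.
    """
    result = []
    for op in dump.get("ops", []):
        age = float(op.get("age", 0.0))
        if age >= min_age:
            flag = op.get("type_data", {}).get("flag_point", "?")
            result.append((age, flag, op.get("description", "")))
    return sorted(result, reverse=True)  # oldest first
```

Usage: `blocked_ops(mds_ops_in_flight("cephfs.host1.abcdef"))`, where the daemon name is hypothetical.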

Updated by fuchen ma 6 months ago

Sorry for the late reply. The Ceph version is 15.2.17 (8a82819d84cf884bd39c17e3236e0632ac146dc4) octopus (stable). I'm mounting the client with FUSE. The client log contains very little (it only records the executed file operations). The cluster is deployed with cephadm, so how should I export the MDS logs? Could you tell me the command? The issue reproduces reliably, so I can attach the logs in my next comment.

Updated by Venky Shankar 6 months ago

fuchen ma wrote:

Sorry for the late reply. The Ceph version is 15.2.17 (8a82819d84cf884bd39c17e3236e0632ac146dc4) octopus (stable).

Could you use a recent ceph version and see if you can reproduce it there?

I'm mounting the client with FUSE. The client log contains very little (it only records the executed file operations). The cluster is deployed with cephadm, so how should I export the MDS logs? Could you tell me the command? The issue reproduces reliably, so I can attach the logs in my next comment.

You'd need debug_mds=20 and debug_client=20. This can be set using:

`ceph config set mds debug_mds 20`

`ceph config set client debug_client 20`
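A sketch that wraps the two commands above together with a revert step and log retrieval on a cephadm host. Assumptions beyond this thread: `ceph config rm` is used to drop the overrides once the logs are collected (debug level 20 produces very large logs), and `cephadm logs --name <daemon>` is run on the host where the MDS daemon lives, since cephadm sends daemon logs to journald by default. It defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess

# (who, option, level) as suggested in this thread.
DEBUG_SETTINGS = [("mds", "debug_mds", "20"), ("client", "debug_client", "20")]


def enable_debug_cmds() -> list:
    """`ceph config set` commands that raise the MDS and client debug levels."""
    return [["ceph", "config", "set", who, opt, lvl] for who, opt, lvl in DEBUG_SETTINGS]


def revert_debug_cmds() -> list:
    """`ceph config rm` commands that drop the overrides again afterwards."""
    return [["ceph", "config", "rm", who, opt] for who, opt, _ in DEBUG_SETTINGS]


def mds_log_cmd(daemon_name: str) -> list:
    """Dump one daemon's journald log; run on the host where that daemon lives."""
    return ["cephadm", "logs", "--name", daemon_name]


def run_all(cmds: list, dry_run: bool = True) -> list:
    """Print (dry run) or execute each command; return them as shell strings."""
    lines = []
    for cmd in cmds:
        lines.append(shlex.join(cmd))
        if dry_run:
            print(lines[-1])
        else:
            subprocess.run(cmd, check=True)
    return lines
```

For example: `run_all(enable_debug_cmds(), dry_run=False)` before reproducing, `run_all([mds_log_cmd("mds.cephfs.host1.abcdef")], dry_run=False)` to fetch the log (the daemon name is hypothetical), then `run_all(revert_debug_cmds(), dry_run=False)`. Per the cephadm documentation, `ceph config set global log_to_file true` additionally makes daemons write log files under `/var/log/ceph/<fsid>/` on each host.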

Updated by Venky Shankar 6 months ago

- Category set to Correctness/Safety

- Assignee set to Venky Shankar

fuchen ma wrote:

Can I change the version of ceph by using cephadm?

I believe this can be done using:

`cephadm shell -- ceph ...`

Updated by fuchen ma 6 months ago

I still don't know how to change the CephFS version with cephadm; `cephadm shell` only shows the versions of ceph-mon, ceph-mds, etc. However, on another cluster, where I deployed the Ceph nodes manually without cephadm and which runs Ceph 18.0.0, I cannot reproduce this bug.

The last time I reproduced the bug, client 1 was appending to a file named 'Eris_zqgazpuduo_1699843008.uiv' while, in parallel, client 2 renamed the directory containing the file and client 3 tried to overwrite the file. Could this be related to the read/write lock?
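The three-way race described above can be sketched as one function per role. The directory names `dirA`/`dirB` and the iteration counts are hypothetical. When run on a single host, all three roles share one client, so this only approximates the report; to mirror it, each role function would run on a different client against the same mount.

```python
import multiprocessing as mp
import os


def open_either(paths: list, flags: int, attempts: int = 100_000) -> int:
    """Open whichever candidate path exists right now (the parent dir keeps moving)."""
    for _ in range(attempts):
        for p in paths:
            try:
                return os.open(p, flags)
            except FileNotFoundError:
                continue
    raise FileNotFoundError(paths[0])


def appender(paths: list, n: int) -> None:
    # Client 1: append; an open descriptor stays valid across directory renames.
    fd = open_either(paths, os.O_WRONLY | os.O_APPEND)
    try:
        for _ in range(n):
            os.write(fd, b"append\n")
    finally:
        os.close(fd)


def renamer(dir_a: str, dir_b: str, n: int) -> None:
    # Client 2: rename the containing directory back and forth; 2*n renames
    # leave it under its original name.
    for i in range(2 * n):
        src, dst = (dir_a, dir_b) if i % 2 == 0 else (dir_b, dir_a)
        os.rename(src, dst)


def overwriter(paths: list, n: int) -> None:
    # Client 3: reopen and overwrite from offset 0, as os.open(path, 1) would.
    for _ in range(n):
        fd = open_either(paths, os.O_WRONLY)
        try:
            os.write(fd, b"overwrite\n")
        finally:
            os.close(fd)


def run_scenario(base: str, name: str, n: int = 200, timeout: float = 300.0) -> dict:
    """Run the three roles in parallel; map role -> exit code (None = still running)."""
    dir_a, dir_b = os.path.join(base, "dirA"), os.path.join(base, "dirB")
    paths = [os.path.join(dir_a, name), os.path.join(dir_b, name)]
    ctx = mp.get_context("fork")  # Unix only; avoids re-importing this module
    procs = {
        "append": ctx.Process(target=appender, args=(paths, n)),
        "rename": ctx.Process(target=renamer, args=(dir_a, dir_b, n)),
        "overwrite": ctx.Process(target=overwriter, args=(paths, n)),
    }
    for p in procs.values():
        p.start()
    status = {}
    for role, p in procs.items():
        p.join(timeout)
        status[role] = p.exitcode
    return status
```

A `None` exit code for any role after the timeout would indicate the kind of hang reported here.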

Updated by Venky Shankar 6 months ago

The bug is likely related to the filelock in the MDS; there have been some fixes in that area in later Ceph versions. You could try one of the latest supported Ceph releases (preferably Pacific or newer) and see whether it reproduces there.
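Before retesting, it may help to confirm that every daemon actually runs Pacific (16.x) or newer. A sketch that parses `ceph versions`, which reports the running version strings per daemon type (plus an `overall` aggregate); it assumes the version strings look like the one quoted in this thread. Clients such as ceph-fuse are not part of that output and would need to be checked separately on each client host.

```python
import json
import re
import subprocess


def major_version(version_string: str) -> int:
    """Extract 15 from e.g. 'ceph version 15.2.17 (...) octopus (stable)'."""
    m = re.search(r"ceph version (\d+)\.", version_string)
    if m is None:
        raise ValueError(f"unrecognised version string: {version_string!r}")
    return int(m.group(1))


def daemons_below(versions: dict, minimum: int = 16) -> dict:
    """Map daemon type -> version strings older than `minimum` (16 is Pacific)."""
    old = {}
    for daemon_type, counts in versions.items():
        if daemon_type == "overall":  # aggregate of all the other entries
            continue
        stale = [v for v in counts if major_version(v) < minimum]
        if stale:
            old[daemon_type] = stale
    return old


def ceph_versions() -> dict:
    """Run `ceph versions` and parse the per-daemon-type JSON it reports."""
    out = subprocess.run(["ceph", "versions", "--format", "json"],
                         check=True, capture_output=True, text=True).stdout
    return json.loads(out)
```

For example, `print(daemons_below(ceph_versions()))` from inside `cephadm shell` prints an empty dict once all daemons are on Pacific or later.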