Bug #46327

closedcephadm: nfs daemons share the same config object

0%

Description

If we create a NFS service with multiple instances, those instance share the same rados object as the configuration source.

E.g.

# cat /tmp/nfs.yml

service_type: nfs

service_id: sesdev_nfs_deployment

placement:

hosts:

- 'mgr0'

- 'osd0'

spec:

pool: rbd

namespace: nfs

# ceph orch apply -i /tmp/nfs.yml

Scheduled nfs.sesdev_nfs_deployment update...

# rados ls -p rbd --all

2020-07-02T07:48:22.637+0000 7fecad052b80 -1 WARNING: all dangerous and experimental features are enabled.

2020-07-02T07:48:22.637+0000 7fecad052b80 -1 WARNING: all dangerous and experimental features are enabled.

2020-07-02T07:48:22.637+0000 7fecad052b80 -1 WARNING: all dangerous and experimental features are enabled.

nfs grace

nfs rec-0000000000000003:nfs.sesdev_nfs_deployment.mgr0

nfs rec-0000000000000003:nfs.sesdev_nfs_deployment.osd0

nfs conf-nfs.sesdev_nfs_deployment <--- this object is shared by all daemons

# podman exec ceph-a83a8c75-ee83-4ada-8881-fc01bdca496b-nfs.sesdev_nfs_deployment.osd0 cat /etc/ganesha/ganesha.conf

<...>

RADOS_URLS {

UserId = "nfs.sesdev_nfs_deployment.osd0";

watch_url = "rados://rbd/nfs/conf-nfs.sesdev_nfs_deployment";

}

# podman exec ceph-a83a8c75-ee83-4ada-8881-fc01bdca496b-nfs.sesdev_nfs_deployment.mgr0 cat /etc/ganesha/ganesha.conf

<...>

RADOS_URLS {

UserId = "nfs.sesdev_nfs_deployment.mgr0";

watch_url = "rados://rbd/nfs/conf-nfs.sesdev_nfs_deployment";

}

Each daemon should have its own configuration object. Otherwise, all daemons are going to share the same exports.

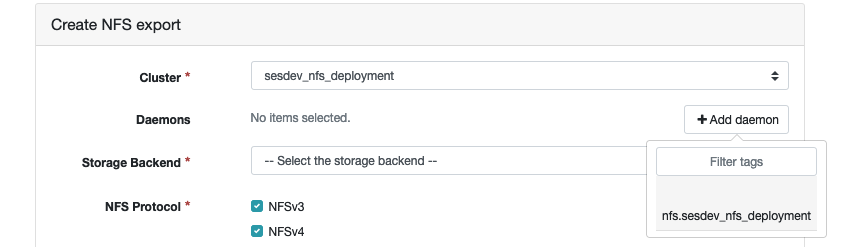

The Dashboard determines daemon instances by enumerating `conf-xxx` objects. If there is only one config, the user can only choose it (but all daemons share the same config actually).

Files

Updated by Varsha Rao almost 4 years ago

- Status changed from New to Rejected

Not a bug, by design all daemons within a cluster will share the same config object.

Updated by Kiefer Chang almost 4 years ago

This causes a regression in the Dashboard. RADOS objects are designed to work with multiple daemons and each daemon has its won configuration before, please see https://docs.ceph.com/docs/nautilus/mgr/dashboard/#nfs-ganesha-management

I suggest letting each daemon have its own configuration object. If in octopus all daemons share the same config, then this introduces some concerns:

- How to migrate daemons in the previous deployments, if each daemon contains different exports.

- The dashboard's Ganesha feature will be tightly coupled with the Orchestrator, the user that deploys Ganesha daemons with other tools needs to follow orchestrator's way.

- Daemons deployed with Rook doesn't share configs.

The slide that describes the regression: https://docs.google.com/presentation/d/1HwwDPXfAG_AJRLItylRlx_0H-JrFNw9sut7Jpm1QPg4/edit?usp=sharing

Updated by Lenz Grimmer almost 4 years ago

- Status changed from Rejected to New

- Regression changed from No to Yes

Reopening, as this change introduces a regression and will potentially break upgrades.

Updated by Patrick Donnelly almost 4 years ago

- Affected Versions v15.2.4 added

- Affected Versions deleted (

v15.2.5, v16.0.0)

Hi Kiefer, I left some similar comments on your slide deck but also will say here:

Kiefer Chang wrote:

This causes a regression in the Dashboard. RADOS objects are designed to work with multiple daemons and each daemon has its won configuration before, please see https://docs.ceph.com/docs/nautilus/mgr/dashboard/#nfs-ganesha-management

I suggest letting each daemon have its own configuration object. If in octopus all daemons share the same config, then this introduces some concerns:

- How to migrate daemons in the previous deployments, if each daemon contains different exports.

"All daemons share the same config" is not correct. We now have NFS-Ganesha clusters. Each cluster shares the same common config and set of exports.

I think the migration path is to convert the dashboard created NFS-Ganesha daemons (Nautilus) to the volumes plugin (src/pybind/mgr/volumes/nfs) to individual NFS-Ganesha clusters. So, 1-1 mapping.

- The dashboard's Ganesha feature will be tightly coupled with the Orchestrator, the user that deploys Ganesha daemons with other tools needs to follow orchestrator's way.

- Daemons deployed with Rook doesn't share configs.

This will soon change in a follow-up fix by Varsha.

The slide that describes the regression: https://docs.google.com/presentation/d/1HwwDPXfAG_AJRLItylRlx_0H-JrFNw9sut7Jpm1QPg4/edit?usp=sharing

Updated by Michael Fritch almost 4 years ago

Patrick Donnelly wrote:

Hi Kiefer, I left some similar comments on your slide deck but also will say here:

I also left a few comments on the slide deck.

Kiefer Chang wrote:

This causes a regression in the Dashboard. RADOS objects are designed to work with multiple daemons and each daemon has its won configuration before, please see https://docs.ceph.com/docs/nautilus/mgr/dashboard/#nfs-ganesha-management

I suggest letting each daemon have its own configuration object. If in octopus all daemons share the same config, then this introduces some concerns:

- How to migrate daemons in the previous deployments, if each daemon contains different exports."All daemons share the same config" is not correct. We now have NFS-Ganesha clusters. Each cluster shares the same common config and set of exports.

The "bootstrap" config for the container image differs, but the RADOS common config is shared for the entire NFS service defined by the Orchestrator.

I think the migration path is to convert the dashboard created NFS-Ganesha daemons (Nautilus) to the volumes plugin (src/pybind/mgr/volumes/nfs) to individual NFS-Ganesha clusters. So, 1-1 mapping.

export files can likely remain, but the new common config could simply be a union of each of the per-daemon configs?

also, I think it would make sense to deprecate the dashboard logic in favor of using the logic contained in the volumes plugin ...

- The dashboard's Ganesha feature will be tightly coupled with the Orchestrator, the user that deploys Ganesha daemons with other tools needs to follow orchestrator's way.

- Daemons deployed with Rook doesn't share configs.This will soon change in a follow-up fix by Varsha.

awesome \o/

Updated by Patrick Donnelly almost 4 years ago

Michael Fritch wrote:

Kiefer Chang wrote:

This causes a regression in the Dashboard. RADOS objects are designed to work with multiple daemons and each daemon has its won configuration before, please see https://docs.ceph.com/docs/nautilus/mgr/dashboard/#nfs-ganesha-management

I suggest letting each daemon have its own configuration object. If in octopus all daemons share the same config, then this introduces some concerns:

- How to migrate daemons in the previous deployments, if each daemon contains different exports."All daemons share the same config" is not correct. We now have NFS-Ganesha clusters. Each cluster shares the same common config and set of exports.

The "bootstrap" config for the container image differs, but the RADOS common config is shared for the entire NFS service defined by the Orchestrator.

The RADOS common config is per-NFS cluster. The pool namespace in the nfs-ganesha pool distinguishes the clusters.

I've also asked Varsha to simplify the bootstrap config defined by the orchestrator. I believe we should keep the bootstrap config as simple as possible so higher level abstractions (i.e. the volumes/nfs code) can have better control and the orchestrator's nfs service is more general (can be applied to rgw/rbd).

Updated by Michael Fritch almost 4 years ago

Patrick Donnelly wrote:

Michael Fritch wrote:

Kiefer Chang wrote:

This causes a regression in the Dashboard. RADOS objects are designed to work with multiple daemons and each daemon has its won configuration before, please see https://docs.ceph.com/docs/nautilus/mgr/dashboard/#nfs-ganesha-management

I suggest letting each daemon have its own configuration object. If in octopus all daemons share the same config, then this introduces some concerns:

- How to migrate daemons in the previous deployments, if each daemon contains different exports."All daemons share the same config" is not correct. We now have NFS-Ganesha clusters. Each cluster shares the same common config and set of exports.

The "bootstrap" config for the container image differs, but the RADOS common config is shared for the entire NFS service defined by the Orchestrator.

The RADOS common config is per-NFS cluster. The pool namespace in the nfs-ganesha pool distinguishes the clusters.

I've also asked Varsha to simplify the bootstrap config defined by the orchestrator. I believe we should keep the bootstrap config as simple as possible so higher level abstractions (i.e. the volumes/nfs code) can have better control and the orchestrator's nfs service is more general (can be applied to rgw/rbd).

Generally agree this is the better approach, but we need to keep in mind that achieving this would require changes to upstream Ganesha:

- the first config block defined in the bootstrap config takes precedence over any duplicate block found in the common config

- the FSAL block embeds the config items for the RADOS user etc.

- these blocks are needed in the bootstrap config to access the RADOS common config during container start..

Updated by Kiefer Chang almost 4 years ago

Updated by Sebastian Wagner almost 4 years ago

- Related to Feature #46493: mgr/dashboard: integrate Dashboard with mgr/nfs module interface added

Updated by Sebastian Wagner almost 4 years ago

- Related to Bug #46492: mgr/dashboard: adapt NFS-Ganesha design change in Octopus (daemons -> services) added

Updated by Sebastian Wagner over 3 years ago

- Status changed from New to Won't Fix

decided to migrate users instead.