Bug #42689

closed · nautilus · mon/mgr: ceph status: pool number display is not right


Description

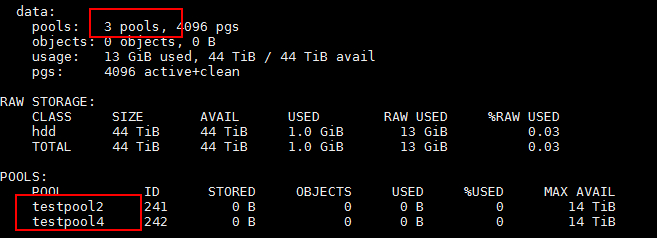

When I create a pool and then remove it, the pool count shown in the `ceph status` output is wrong.

Meanwhile, the mgr dashboard module shows the correct information.

I suspect a bug in the Paxos service between the mon and the mgr.

With the mon debug log level set to 20, the mgrstat `pending_digest` shows the following pool stats:

```
2019-11-07 15:09:11.300 ffff85715750 20 mon.test1@0(leader).mgrstat pending_digest:
"pool_stats": [
    {
        "poolid": 240,
        "stat_sum": {
            "num_bytes": 0,
            "num_objects": 0,
            "num_object_clones": 0,
            "num_object_copies": 0,
            "num_objects_missing_on_primary": 0,
            "num_objects_missing": 0,
            "num_objects_degraded": 0,
            "num_objects_misplaced": 0,
            "num_objects_unfound": 0,
            "num_objects_dirty": 0,
            "num_whiteouts": 0,
            "num_read": 0,
            "num_read_kb": 0,
            "num_write": 0,
            "num_write_kb": 0,
            "num_scrub_errors": 0,
            "num_shallow_scrub_errors": 0,
            "num_deep_scrub_errors": 0,
            "num_objects_recovered": 0,
            "num_bytes_recovered": 0,
            "num_keys_recovered": 0,
            "num_objects_omap": 0,
            "num_objects_hit_set_archive": 0,
            "num_bytes_hit_set_archive": 0,
            "num_flush": 0,
            "num_flush_kb": 0,
            "num_evict": 0,
            "num_evict_kb": 0,
            "num_promote": 0,
            "num_flush_mode_high": 0,
            "num_flush_mode_low": 0,
            "num_evict_mode_some": 0,
            "num_evict_mode_full": 0,
            "num_objects_pinned": 0,
            "num_legacy_snapsets": 0,
            "num_large_omap_objects": 0,
            "num_objects_manifest": 0,
            "num_omap_bytes": 0,
            "num_omap_keys": 0,
            "num_objects_repaired": 0
        },
        "store_stats": {
            "total": 0,
            "available": 0,
            "internally_reserved": 0,
            "allocated": 0,
            "data_stored": 0,
            "data_compressed": 0,
            "data_compressed_allocated": 0,
            "data_compressed_original": 0,
            "omap_allocated": 0,
            "internal_metadata": 0
        },
        "log_size": 0,
        "ondisk_log_size": 0,
        "up": 0,
        "acting": 0,
        "num_store_stats": 0
    },
    {
        "poolid": 241,
        "num_pg": 2048,
        "stat_sum": {
            "num_bytes": 0,
            "num_objects": 0,
            "num_object_clones": 0,
            "num_object_copies": 0,
            "num_objects_missing_on_primary": 0,
            "num_objects_missing": 0,
            "num_objects_degraded": 0,
            "num_objects_misplaced": 0,
            "num_objects_unfound": 0,
            "num_objects_dirty": 0,
            "num_whiteouts": 0,
            "num_read": 0,
            "num_read_kb": 0,
            "num_write": 0,
            "num_write_kb": 0,
            "num_scrub_errors": 0,
            "num_shallow_scrub_errors": 0,
            "num_deep_scrub_errors": 0,
            "num_objects_recovered": 0,
            "num_bytes_recovered": 0,
            "num_keys_recovered": 0,
            "num_objects_omap": 0,
            "num_objects_hit_set_archive": 0,
            "num_bytes_hit_set_archive": 0,
            "num_flush": 0,
            "num_flush_kb": 0,
            "num_evict": 0,
            "num_evict_kb": 0,
            "num_promote": 0,
            "num_flush_mode_high": 0,
            "num_flush_mode_low": 0,
            "num_evict_mode_some": 0,
            "num_evict_mode_full": 0,
            "num_objects_pinned": 0,
            "num_legacy_snapsets": 0,
            "num_large_omap_objects": 0,
            "num_objects_manifest": 0,
            "num_omap_bytes": 0,
            "num_omap_keys": 0,
            "num_objects_repaired": 0
        },
        "store_stats": {
            "total": 0,
            "available": 0,
            "internally_reserved": 0,
            "allocated": 0,
            "data_stored": 0,
            "data_compressed": 0,
            "data_compressed_allocated": 0,
            "data_compressed_original": 0,
            "omap_allocated": 0,
            "internal_metadata": 0
        },
        "log_size": 0,
        "ondisk_log_size": 0,
        "up": 4096,
        "acting": 4096,
        "num_store_stats": 4096
    },
    {
        "poolid": 242,
        "num_pg": 2048,
        "stat_sum": {
            "num_bytes": 0,
            "num_objects": 0,
            "num_object_clones": 0,
            "num_object_copies": 0,
            "num_objects_missing_on_primary": 0,
            "num_objects_missing": 0,
            "num_objects_degraded": 0,
            "num_objects_misplaced": 0,
            "num_objects_unfound": 0,
            "num_objects_dirty": 0,
            "num_whiteouts": 0,
            "num_read": 0,
            "num_read_kb": 0,
            "num_write": 0,
            "num_write_kb": 0,
            "num_scrub_errors": 0,
            "num_shallow_scrub_errors": 0,
            "num_deep_scrub_errors": 0,
            "num_objects_recovered": 0,
            "num_bytes_recovered": 0,
            "num_keys_recovered": 0,
            "num_objects_omap": 0,
            "num_objects_hit_set_archive": 0,
            "num_bytes_hit_set_archive": 0,
            "num_flush": 0,
            "num_flush_kb": 0,
            "num_evict": 0,
            "num_evict_kb": 0,
            "num_promote": 0,
            "num_flush_mode_high": 0,
            "num_flush_mode_low": 0,
            "num_evict_mode_some": 0,
            "num_evict_mode_full": 0,
            "num_objects_pinned": 0,
            "num_legacy_snapsets": 0,
            "num_large_omap_objects": 0,
            "num_objects_manifest": 0,
            "num_omap_bytes": 0,
            "num_omap_keys": 0,
            "num_objects_repaired": 0
        },
        "store_stats": {
            "total": 0,
            "available": 0,
            "internally_reserved": 0,
            "allocated": 0,
            "data_stored": 0,
            "data_compressed": 0,
            "data_compressed_allocated": 0,
            "data_compressed_original": 0,
            "omap_allocated": 0,
            "internal_metadata": 0
        },
        "log_size": 0,
        "ondisk_log_size": 0,
        "up": 4096,
        "acting": 4096,
        "num_store_stats": 4096
    }
],
```
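In the log above, the entry for pool 240 differs from the entries for pools 241 and 242. It has no `num_pg` field, and its `up`, `acting` and `num_store_stats` counters are all 0. That pattern is what one might expect from a leftover entry for the removed pool. The Python sketch below is a hypothetical diagnostic helper, not part of Ceph or its mgr modules. It flags such entries in a trimmed copy of the logged `pool_stats` array. Two choices here are illustrative assumptions: treating a missing `num_pg` as a sign of a stale entry, and the optional `live_pool_ids` set. This report does not confirm that the pool count in `ceph status` is computed from this array.

```python
import json

# Trimmed copy of the pool_stats array from the mon debug log: only the
# fields this check looks at are kept (stat_sum/store_stats omitted).
DIGEST_EXCERPT = """
[
  {"poolid": 240, "up": 0, "acting": 0, "num_store_stats": 0},
  {"poolid": 241, "num_pg": 2048, "up": 4096, "acting": 4096, "num_store_stats": 4096},
  {"poolid": 242, "num_pg": 2048, "up": 4096, "acting": 4096, "num_store_stats": 4096}
]
"""


def find_suspect_pools(pool_stats, live_pool_ids=None):
    """Return the pool ids whose digest entry looks stale (hypothetical heuristic).

    An entry is flagged if it has no "num_pg" field, or, when live_pool_ids
    (an optional, caller-supplied set of pool ids that still exist) is given,
    if its poolid is not in that set.
    """
    suspect = []
    for entry in pool_stats:
        pid = entry["poolid"]
        missing_pg = "num_pg" not in entry
        not_live = live_pool_ids is not None and pid not in live_pool_ids
        if missing_pg or not_live:
            suspect.append(pid)
    return suspect


if __name__ == "__main__":
    stats = json.loads(DIGEST_EXCERPT)
    print("pools in digest:", len(stats))
    print("suspect entries:", find_suspect_pools(stats))
```

Run as-is, the script reports 3 entries in the digest excerpt and flags pool 240. A caller could also pass a set of pool ids obtained independently, for example from `ceph osd lspools`. The helper would then also flag entries for pools that no longer exist. Collecting that input is left to the caller.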


Updated by Neha Ojha over 4 years ago

- Related to Bug #40011: ceph -s shows wrong number of pools when pool was deleted added

Updated by Kefu Chai over 4 years ago

- Related to deleted (Bug #40011: ceph -s shows wrong number of pools when pool was deleted)

Updated by Kefu Chai over 4 years ago

- Is duplicate of Bug #40011: ceph -s shows wrong number of pools when pool was deleted added