Bug #37668

openAbortMultipartUpload causes data loss(NoSuchKey) when CompleteMultipartUpload request timeout

0%

Description

We have some Ceph clusters using RGW as S3/OSS service. Unfortunately, our users reported they had lost some data sometimes.

The detail is:

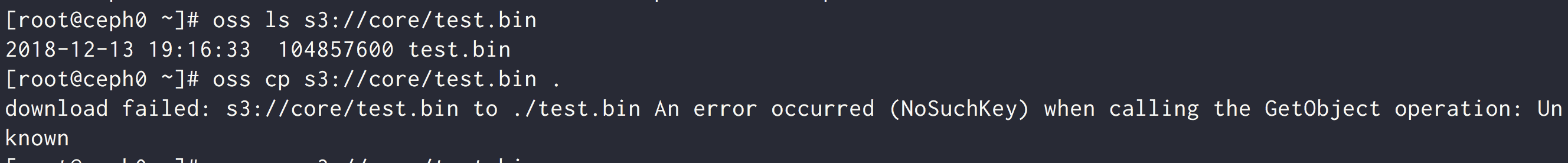

At the beginning, they uploaded these files successfully, and they could download these files correctly at that time, of cause;

After some time(maybe some hours, or a day, or even days), they CANNOT download these files again but they could list out them, and got a "NoSuchKey" error.

So we check the Ceph clusters and the behaviours of the client(awscli), analysed with the rgw server source code and its logs, finally we found this bug: When the cluster is slow(which causes the CompleteMultipartUpload request timeout), the awscli(or other) client sends a DELETE request(AbortMultipartUpload to clean up the upload, put the real data(multi parts) to gc. However, the upload job is actually finished when the CompleteMultipartUpload request return, so the head_obj with the filename is retained in the cluster and could be list out, but its data had gone away.

we use the newest Luminous v12.2.10 for mon/osd/rgw/mgr everything.

[bug reproduce]

1.Using awscli (or any other s3 client for sending request) to PUT a large(e.g. 100MB) file to rgw, make sure using MultipartUpload;

2.Waiting for the multi parts putting finished;

3.When the awscli client finished putting multi parts, it begins to send POST CompleteMultipartUpload requests to rgw server, every request has a 60 seconds to timeout and re-try 4 times(total 5 requests). We use Charles (or any other proxy software) to block these POST requests so it will send DELETE request later;

4.After 5 times POST CompleteMultipartUpload requests, the awscli client begins to send DELETErequests to rgw server, for its opinion, the upload is timeout and the uploaded file status is unknown, so clean up the upload;

5.We also use Charles block these DELETE requests so we can have time to unblock a POST CompleteMultipartUpload;

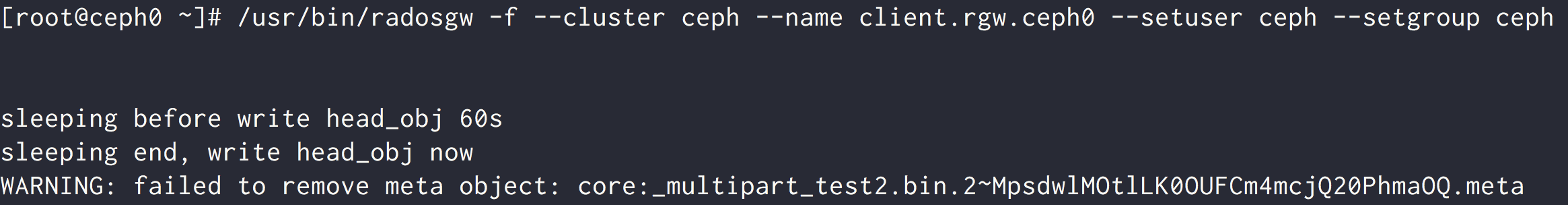

6.For 100% reproduce, we have a simple patch to let the rgw to simulate the cluster is very slow, sleeping in the CompleteMultipartUpload::execute():

[root@ceph1 ceph]# git diff

diff --git a/src/rgw/rgw_op.cc b/src/rgw/rgw_op.cc

index dce4549..8948f1f 100644

--- a/src/rgw/rgw_op.cc

+++ b/src/rgw/rgw_op.cc

@@ -5610,6 +5610,9 @@ void RGWCompleteMultipart::execute()

RGWObjectCtx& obj_ctx = *static_cast<RGWObjectCtx *>(s->obj_ctx);

+ std::cout << "sleeping before write head_obj 60s" << std::endl;

+ sleep(60);

+ std::cout << "sleeping end, write head_obj now" << std::endl;

obj_ctx.obj.set_atomic(target_obj);

RGWRados::Object op_target(store, s->bucket_info, *static_cast<RGWObjectCtx *>(s->obj_ctx), target_obj);

@@ -5637,6 +5640,7 @@ void RGWCompleteMultipart::execute()

} else {

ldout(store->ctx(), 0) << "WARNING: failed to remove object "

<< meta_obj << dendl;

+ std::cout << "WARNING: failed to remove meta object: " << meta_obj << std::endl;

}

}

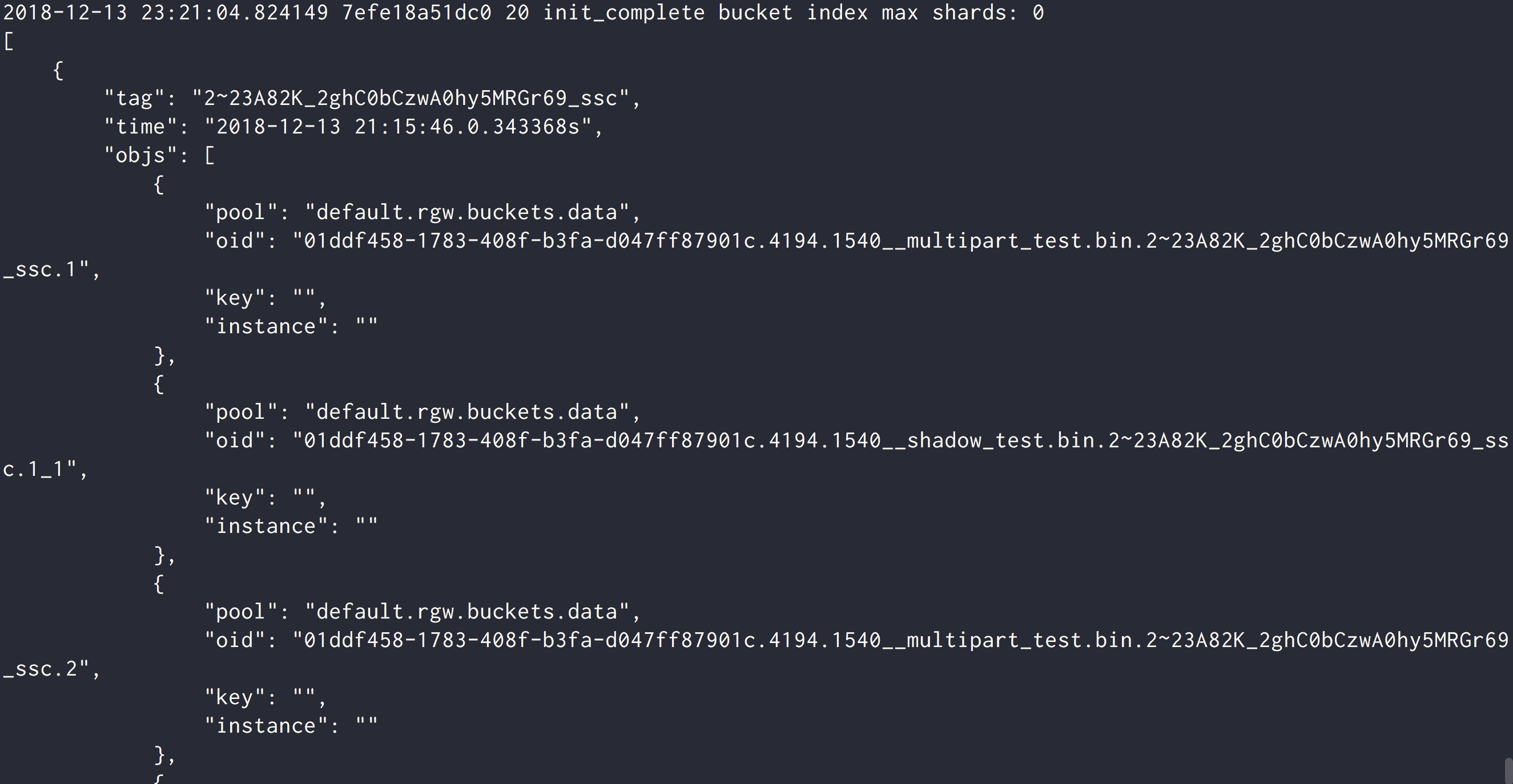

7. After any DELETErequest is sent and blocked, we unblock any POST CompleteMultipartUpload request, it will be processed to write the head_obj of the upload file after sleeping;

8. Unblock any DELETErequest, the response is got immediately with http return code 204(Success), and the data(multiparts) of the file has been put to GC, the meta_obj of the upload is removed;

9. When the POST CompleteMultipartUpload request wakes up, the head_obj is write successfully and return 200(OK) to the client;

10. You could download the file(even its data is already in GC) until its multiparts are processed in GC.

[Where is the bug?]

It's in RGWAbortMultipart::execute():

There is nothing to protect the meta_obj for race-condition (another thread is processing POST CompleteMultipartUpload request), the meta_obj and the data(multiparts) are deleted(put to GC) directly.

So after some time, you can see it ,but you cannot get it. _.

Files

Updated by Adam Emerson over 5 years ago

- File deleted (

rgw_ls_but_cannot_download_bug.pdf)

Updated by Adam Emerson over 5 years ago

Deleted private attachment at poster's request.

Updated by Princi Part about 5 years ago

OMG!!! Is there anybody to deal with this issue?!!

Updated by Thomas Kriechbaumer almost 5 years ago

Is there any progress on this?

I think we see similar errors - also with corrupt files that cause a 404 someway half-through a file download. We also used multipart uploads and experienced random timeouts - but I'm not aware that our custom S3 handling sent any AbortMultipartUpload (but it might have underneath without me knowing).

We currently have about 10 files which can be listed, show the correct size, but cannot be fully downloaded.

Updated by Matt Benjamin over 3 years ago

Possibly the abort path needs to also participate in the mp_serializer if we want to deterministically resolve

Updated by xiaobao wen 3 months ago

We also encountered a similar problem on v16.2.10.

The s3 index object exists, but it return 404 error when getting .

Rgw logs about the object uploaded:

645 /var/lib/rook/rook-ceph/log/ceph-client.rgw.os.dsglczutvqsgowpz.a.log.2.gz:2024-01-29T19:43:35.703+0000 7f0f3144a700 1 ====== req done req=0x7f0e37ec1600 op status=0 http_status=200 latency=66.566802979s ====== 646 /var/lib/rook/rook-ceph/log/ceph-client.rgw.os.dsglczutvqsgowpz.a.log.2.gz:2024-01-29T19:43:35.703+0000 7f0f3144a700 1 beast: 0x7f0e37ec1600: 178.16.170.50 - simulation-scenarios [29/Jan/2024:19:42:29.137 +0000] "POST /simulation-scenarios/tag_instance _1549404_1706518000581_1706518004389.bag?uploadId=2~HU9DLsY4WCavp7YOHYZfKBJ5fZvd0oV HTTP/1.1" 200 419 - "Boto3/1.23.10 Python/3.6.9 Linux/5.11.0-051100-generic Botocore/1.26.10" - latency=66.566802979s 1445 /var/lib/rook/rook-ceph/log/ceph-client.rgw.os.dsglczutvqsgowpz.a.log.2.gz:2024-01-29T19:43:33.432+0000 7f75b3036700 1 ====== req done req=0x7f7543cd2600 op status=0 http_status=204 latency=0.140001401s ====== 1446 /var/lib/rook/rook-ceph/log/ceph-client.rgw.os.dsglczutvqsgowpz.a.log.2.gz:2024-01-29T19:43:33.432+0000 7f75b3036700 1 beast: 0x7f7543cd2600: 178.16.170.50 - simulation-scenarios [29/Jan/2024:19:43:33.292 +0000] "DELETE /simulation-scenarios/tag_instan ce_1549404_1706518000581_1706518004389.bag?uploadId=2~HU9DLsY4WCavp7YOHYZfKBJ5fZvd0oV HTTP/1.1" 204 0 - "Boto3/1.23.10 Python/3.6.9 Linux/5.11.0-051100-generic Botocore/1.26.10" - latency=0.140001401s

Both `AbortMultipartUpload` request and `CompleteMultipartUpload` request were executed successfully.

Rgw data pool has only one object named "1db8bc57-cb5c-4fcf-a389-754c16ec70c2.268291379.2_tag_instance_1549404_1706518000581_1706518004389.bag"