Bug #16842

closedmds: replacement MDS crashes on InoTable release

0%

Description

ceph version 10.2.0-2638-gf7fc985

reproduce step:

1. new fs and start mds.a

2. start mds.b

3. kill mds.a

failed log of mds.b

71> 2016-07-28 02:12:59.648888 7f9d157fa700 1 mds.0.143 handle_mds_map state change up:replay -> up:reconnect

70> 2016-07-28 02:12:59.648902 7f9d157fa700 1 mds.0.143 reconnect_start 1 sessions

-69> 2016-07-28 02:12:59.648904 7f9d157fa700 1 mds.0.143 reopen_log

-68> 2016-07-28 02:12:59.648909 7f9d157fa700 1 mds.0.server reconnect_clients -

-67> 2016-07-28 02:12:59.648945 7f9d157fa700 5 mds.0.bal rebalance done

......

-1> 2016-07-28 02:13:46.851477 7f9d137f6700 1 mds.0.server reconnect gave up on client.104105 10.0.11.212:0/416314019

0> 2016-07-28 02:13:46.857379 7f9d137f6700 -1 ./include/interval_set.h: In function 'void interval_set<T>::insert(T, T, T*, T*) [with T = inodeno_t]' thread 7f9d137f6700 time 2016-07-28 02:13:46.851534

./include/interval_set.h: 355: FAILED assert(0)

ceph version 10.2.0-2638-gf7fc985 (f7fc9857fcf69492a8e781a8f2ca5799529b756e)

1: (ceph::__ceph_assert_fail(char const*, char const*, int, char const*)+0x8b) [0x7f9d2033c5eb]

2: (interval_set<inodeno_t>::insert(inodeno_t, inodeno_t, inodeno_t*, inodeno_t*)+0x34a) [0x7f9d2017b3da]

3: (InoTable::project_release_ids(interval_set<inodeno_t>&)+0x1e5) [0x7f9d2017a6e5]

4: (Server::journal_close_session(Session*, int, Context*)+0x172) [0x7f9d1ffab9a2]

5: (Server::kill_session(Session*, Context*)+0xf7) [0x7f9d1ffadce7]

6: (Server::reconnect_tick()+0x138) [0x7f9d1ffaf5c8]

7: (MDSRankDispatcher::tick()+0x379) [0x7f9d1ff74569]

8: (Context::complete(int)+0x9) [0x7f9d1ff63129]

9: (SafeTimer::timer_thread()+0xec) [0x7f9d2032d46c]

10: (SafeTimerThread::entry()+0xd) [0x7f9d2032ee0d]

11: (()+0x7df5) [0x7f9d1f900df5]

12: (clone()+0x6d) [0x7f9d1da8e1ad]Files

Updated by Greg Farnum over 7 years ago

- Subject changed from Standby mds replace target rank mds failed in reconnect state to Replacement MDS crashes on InoTable release

- Category set to Code Hygiene

- Assignee deleted (

Greg Farnum) - Priority changed from High to Normal

This looks more complicated than that to reproduce. The code that's crashing is timing out a client connection that disappeared and is trying to clean up allocated resources.

Based on the backtrace, it looks like the MDS is passing in an interval set (for freeing preallocated inodes) that doesn't relate correctly to the existing free set? I'm a little confused just skimming through it.

Updated by John Spray over 7 years ago

Min Chen: can you describe the client part of how to reproduce this? What does the client have to be doing to reproduce the crash?

Updated by huanwen ren over 7 years ago

I met the same problem, ceph version is 10.2.2.0,

the client just simple copy file to the dir of ceph-fuse mounting

MDS crash as follows:

2016-09-05 14:22:33.180223 7faaa1748700 10 mds.beacon.cephfs103 _send up:reconnect seq 11 2016-09-05 14:22:33.181823 7faaa484f700 10 mds.beacon.cephfs103 handle_mds_beacon up:reconnect seq 11 rtt 0.001573 2016-09-05 14:22:36.474969 7faaa1f49700 15 mds.0.bal get_load mdsload<[0,0 0]/[0,0 0], req 0, hr 0, qlen 0, cpu 0.08> 2016-09-05 14:22:37.180300 7faaa1748700 10 mds.beacon.cephfs103 _send up:reconnect seq 12 2016-09-05 14:22:37.181933 7faaa484f700 10 mds.beacon.cephfs103 handle_mds_beacon up:reconnect seq 12 rtt 0.001606 2016-09-05 14:22:41.180401 7faaa1748700 10 mds.beacon.cephfs103 _send up:reconnect seq 13 2016-09-05 14:22:41.182000 7faaa484f700 10 mds.beacon.cephfs103 handle_mds_beacon up:reconnect seq 13 rtt 0.001564 2016-09-05 14:22:41.475036 7faaa1f49700 15 mds.0.bal get_load mdsload<[0,0 0]/[0,0 0], req 0, hr 0, qlen 0, cpu 0.16> 2016-09-05 14:22:45.180493 7faaa1748700 10 mds.beacon.cephfs103 _send up:reconnect seq 14 2016-09-05 14:22:45.182344 7faaa484f700 10 mds.beacon.cephfs103 handle_mds_beacon up:reconnect seq 14 rtt 0.001815 2016-09-05 14:22:46.475115 7faaa1f49700 15 mds.0.bal get_load mdsload<[0,0 0]/[0,0 0], req 0, hr 0, qlen 0, cpu 0.14> 2016-09-05 14:22:46.475198 7faaa1f49700 10 mds.0.server reconnect timed out 2016-09-05 14:22:46.475206 7faaa1f49700 1 mds.0.server reconnect gave up on client.2775535 100.100.100.102:0/1842504637 2016-09-05 14:22:46.475238 7faaa1f49700 10 mds.0.server kill_session 0x7faab4cfee00 2016-09-05 14:22:46.475251 7faaa1f49700 20 mds.0.sessionmap mark_projected s=0x7faab4cfee00 name=client.2775535 pv=110 -> 111 2016-09-05 14:22:46.475265 7faaa1f49700 10 mds.0.inotable: project_release_ids [10000014aee~230] to [100000003e9~fffffffc17]/[100000003e9~fffffffc17] 2016-09-05 14:22:46.479619 7faaa1f49700 -1 ./include/interval_set.h: In function 'void interval_set<T>::insert(T, T, T*, T*) [with T = inodeno_t]' thread 7faaa1f49700 time 2016-09-05 14:22:46.475291 ./include/interval_set.h: 355: FAILED assert(0) ceph version 10.2.2 (45107e21c568dd033c2f0a3107dec8f0b0e58374) 1: (ceph::__ceph_assert_fail(char const*, char const*, int, char const*)+0x85) [0x7faaaaea0915] 2: (interval_set<inodeno_t>::insert(inodeno_t, inodeno_t, inodeno_t*, inodeno_t*)+0x34a) [0x7faaaacd9f8a] 3: (InoTable::project_release_ids(interval_set<inodeno_t>&)+0x1f5) [0x7faaaacd9215] 4: (Server::journal_close_session(Session*, int, Context*)+0x18b) [0x7faaaab01b0b] 5: (Server::kill_session(Session*, Context*)+0x12b) [0x7faaaab03bbb] 6: (Server::reconnect_tick()+0x150) [0x7faaaab054d0] 7: (MDSRankDispatcher::tick()+0x389) [0x7faaaaac9d89] 8: (Context::complete(int)+0x9) [0x7faaaaab7829] 9: (SafeTimer::timer_thread()+0x104) [0x7faaaae91124] 10: (SafeTimerThread::entry()+0xd) [0x7faaaae92b5d] 11: (()+0x7df3) [0x7faaa9c75df3] 12: (clone()+0x6d) [0x7faaa874254d] NOTE: a copy of the executable, or `objdump -rdS <executable>` is needed to interpret this. --- begin dump of recent events ---

Updated by John Spray over 7 years ago

Hmm, so the client session thinks it had something preallocated that the inotable thinks is already free.

I wonder if we have a bug in the journalling of preallocating inodes, or if we have some location where we're prematurely saving inotable.

huanwen: please can you provide an MDS log with debugging enabled from the point of startup to the point of crash?

Updated by huanwen ren over 7 years ago

- File ceph-mds.cephfs103.zip ceph-mds.cephfs103.zip added

@John Spray

add an complete MDS log, and set debug_mds = 30/30

Updated by John Spray over 7 years ago

- Priority changed from Normal to High

- Target version set to v12.0.0

Hmm, this one got lost somehow, targeting to Luminous so that it gets at least looked at.

Updated by c sights about 7 years ago

Hi All,

After upgrading to 10.2.6 on Debian Jessie, the MDS server fails to start. Below is what is written to the log file from attempted start to failure:

After turning up debugging the log is 3.8MB compressed, so I won't be able to upload that here.

Thanks!

C.

On 03/16/2017 12:48 PM, root@mds01.hep.wisc.edu wrote:

2017-03-16 12:46:38.063709 7f605e746180 0 set uid:gid to 64045:64045 (ceph:ceph)

2017-03-16 12:46:38.063825 7f605e746180 0 ceph version 10.2.6 (656b5b63ed7c43bd014bcafd81b001959d5f089f), process ceph-mds, pid 10858

2017-03-16 12:46:39.755982 7f6057b62700 1 mds.mds01.hep.wisc.edu handle_mds_map standby

2017-03-16 12:46:39.898430 7f6057b62700 1 mds.0.4072 handle_mds_map i am now mds.0.4072

2017-03-16 12:46:39.898437 7f6057b62700 1 mds.0.4072 handle_mds_map state change up:boot --> up:replay

2017-03-16 12:46:39.898459 7f6057b62700 1 mds.0.4072 replay_start

2017-03-16 12:46:39.898466 7f6057b62700 1 mds.0.4072 recovery set is

2017-03-16 12:46:39.898475 7f6057b62700 1 mds.0.4072 waiting for osdmap 253396 (which blacklists prior instance)

2017-03-16 12:46:40.227204 7f6052956700 0 mds.0.cache creating system inode with ino:100

2017-03-16 12:46:40.227569 7f6052956700 0 mds.0.cache creating system inode with ino:1

2017-03-16 12:46:40.954494 7f6050d48700 1 mds.0.4072 replay_done

2017-03-16 12:46:40.954526 7f6050d48700 1 mds.0.4072 making mds journal writeable

2017-03-16 12:46:42.211070 7f6057b62700 1 mds.0.4072 handle_mds_map i am now mds.0.4072

2017-03-16 12:46:42.211074 7f6057b62700 1 mds.0.4072 handle_mds_map state change up:replay --> up:reconnect

2017-03-16 12:46:42.211094 7f6057b62700 1 mds.0.4072 reconnect_start

2017-03-16 12:46:42.211098 7f6057b62700 1 mds.0.4072 reopen_log

2017-03-16 12:46:42.211105 7f6057b62700 1 mds.0.server reconnect_clients -- 5 sessions

2017-03-16 12:47:28.502417 7f605535d700 1 mds.0.server reconnect gave up on client.14384220 10.128.198.55:0/2012593454

2017-03-16 12:47:28.505126 7f605535d700 -1 ./include/interval_set.h: In function 'void interval_set<T>::insert(T, T, T*, T*) [with T = inodeno_t]' thread 7f605535d700 time 2017-03-16 12:47:28.502496

./include/interval_set.h: 355: FAILED assert(0)ceph version 10.2.6 (656b5b63ed7c43bd014bcafd81b001959d5f089f)

1: (ceph::__ceph_assert_fail(char const*, char const*, int, char const*)+0x82) [0x7f605e248ed2]

2: (()+0x1ea5fe) [0x7f605de245fe]

3: (InoTable::project_release_ids(interval_set<inodeno_t>&)+0x917) [0x7f605e065ad7]

4: (Server::journal_close_session(Session*, int, Context*)+0x18e) [0x7f605de89f1e]

5: (Server::kill_session(Session*, Context*)+0x133) [0x7f605de8bf23]

6: (Server::reconnect_tick()+0x148) [0x7f605de8d378]

7: (MDSRankDispatcher::tick()+0x389) [0x7f605de524d9]

8: (Context::complete(int)+0x9) [0x7f605de3fcd9]

9: (SafeTimer::timer_thread()+0x104) [0x7f605e239e84]

10: (SafeTimerThread::entry()+0xd) [0x7f605e23ad2d]

11: (()+0x8064) [0x7f605d53d064]

12: (clone()+0x6d) [0x7f605ba8262d]

NOTE: a copy of the executable, or `objdump -rdS <executable>` is needed to interpret this.--- begin dump of recent events ---

263> 2017-03-16 12:46:38.056353 7f605e746180 5 asok(0x7f6068a2a000) register_command perfcounters_dump hook 0x7f6068a0603010.128.198.55:0/0 learned my addr 10.128.198.55:0/0

-262> 2017-03-16 12:46:38.056425 7f605e746180 5 asok(0x7f6068a2a000) register_command 1 hook 0x7f6068a06030

-261> 2017-03-16 12:46:38.056431 7f605e746180 5 asok(0x7f6068a2a000) register_command perf dump hook 0x7f6068a06030

-260> 2017-03-16 12:46:38.056434 7f605e746180 5 asok(0x7f6068a2a000) register_command perfcounters_schema hook 0x7f6068a06030

-259> 2017-03-16 12:46:38.056437 7f605e746180 5 asok(0x7f6068a2a000) register_command 2 hook 0x7f6068a06030

-258> 2017-03-16 12:46:38.056440 7f605e746180 5 asok(0x7f6068a2a000) register_command perf schema hook 0x7f6068a06030

-257> 2017-03-16 12:46:38.056444 7f605e746180 5 asok(0x7f6068a2a000) register_command perf reset hook 0x7f6068a06030

-256> 2017-03-16 12:46:38.056448 7f605e746180 5 asok(0x7f6068a2a000) register_command config show hook 0x7f6068a06030

-255> 2017-03-16 12:46:38.056457 7f605e746180 5 asok(0x7f6068a2a000) register_command config set hook 0x7f6068a06030

-254> 2017-03-16 12:46:38.056461 7f605e746180 5 asok(0x7f6068a2a000) register_command config get hook 0x7f6068a06030

-253> 2017-03-16 12:46:38.056464 7f605e746180 5 asok(0x7f6068a2a000) register_command config diff hook 0x7f6068a06030

-252> 2017-03-16 12:46:38.056466 7f605e746180 5 asok(0x7f6068a2a000) register_command log flush hook 0x7f6068a06030

-251> 2017-03-16 12:46:38.056469 7f605e746180 5 asok(0x7f6068a2a000) register_command log dump hook 0x7f6068a06030

-250> 2017-03-16 12:46:38.056472 7f605e746180 5 asok(0x7f6068a2a000) register_command log reopen hook 0x7f6068a06030

-249> 2017-03-16 12:46:38.063709 7f605e746180 0 set uid:gid to 64045:64045 (ceph:ceph)

-248> 2017-03-16 12:46:38.063825 7f605e746180 0 ceph version 10.2.6 (656b5b63ed7c43bd014bcafd81b001959d5f089f), process ceph-mds, pid 10858

-247> 2017-03-16 12:46:38.064580 7f605e746180 1 -246> 2017-03-16 12:46:38.064596 7f605e746180 1 accepter.accepter.bind my_inst.addr is 10.128.198.55:6800/2760248606 need_addr=010.128.198.55:6800/2760248606 messenger.start

-245> 2017-03-16 12:46:38.074400 7f605e746180 5 asok(0x7f6068a2a000) init /var/run/ceph/ceph-mds.mds01.hep.wisc.edu.asok

-244> 2017-03-16 12:46:38.074424 7f605e746180 5 asok(0x7f6068a2a000) bind_and_listen /var/run/ceph/ceph-mds.mds01.hep.wisc.edu.asok

-243> 2017-03-16 12:46:38.074459 7f605e746180 5 asok(0x7f6068a2a000) register_command 0 hook 0x7f6068a02090

-242> 2017-03-16 12:46:38.074468 7f605e746180 5 asok(0x7f6068a2a000) register_command version hook 0x7f6068a02090

-241> 2017-03-16 12:46:38.074472 7f605e746180 5 asok(0x7f6068a2a000) register_command git_version hook 0x7f6068a02090

-240> 2017-03-16 12:46:38.074478 7f605e746180 5 asok(0x7f6068a2a000) register_command help hook 0x7f6068a06100

-239> 2017-03-16 12:46:38.074482 7f605e746180 5 asok(0x7f6068a2a000) register_command get_command_descriptions hook 0x7f6068a06110

-238> 2017-03-16 12:46:38.074586 7f605e746180 10 monclient(hunting): build_initial_monmap

-237> 2017-03-16 12:46:38.074605 7f6059365700 5 asok(0x7f6068a2a000) entry start

-236> 2017-03-16 12:46:38.074670 7f605e746180 1 -235> 2017-03-16 12:46:38.074834 7f605e746180 5 adding auth protocol: cephx10.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- auth(proto 0 43 bytes epoch 0) v1 -- ?+0 0x7f6068a22480 con 0x7f6068a44600

-234> 2017-03-16 12:46:38.074864 7f605e746180 5 adding auth protocol: cephx

-233> 2017-03-16 12:46:38.075023 7f605e746180 5 asok(0x7f6068a2a000) register_command objecter_requests hook 0x7f6068a06120

-232> 2017-03-16 12:46:38.075220 7f605e746180 1 accepter.accepter.start

-231> 2017-03-16 12:46:38.075323 7f605e746180 10 monclient(hunting): init

-230> 2017-03-16 12:46:38.075339 7f605e746180 5 adding auth protocol: cephx

-229> 2017-03-16 12:46:38.075342 7f605e746180 10 monclient(hunting): auth_supported 2 method cephx

-228> 2017-03-16 12:46:38.075725 7f605e746180 2 auth: KeyRing::load: loaded key file /var/lib/ceph/mds/ceph-mds01.hep.wisc.edu/keyring

-227> 2017-03-16 12:46:38.075883 7f605e746180 10 monclient(hunting): _reopen_session rank -1 name

-226> 2017-03-16 12:46:38.076015 7f605e746180 10 monclient(hunting): picked mon.noname-a con 0x7f6068a44600 addr 128.104.164.197:6789/0

-225> 2017-03-16 12:46:38.076112 7f605e746180 10 monclient(hunting): _send_mon_message to mon.noname-a at 128.104.164.197:6789/0

-224> 2017-03-16 12:46:38.076117 7f605e746180 1 -223> 2017-03-16 12:46:38.076149 7f605e746180 10 monclient(hunting): renew_subs10.128.198.55:6800/2760248606 >> 128.104.164.197:6789/0 pipe(0x7f6068aec000 sd=9 :48510 s=2 pgs=61333 cs=1 l=1 c=0x7f6068a44600).reader got KEEPALIVE_ACK

-222> 2017-03-16 12:46:38.082931 7f605dc36700 2 -221> 2017-03-16 12:46:38.287861 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 1 ==== mon_map magic: 0 v1 ==== 485+0+0 (3508460097 0 0) 0x7f6068a22900 con 0x7f6068a44600

-220> 2017-03-16 12:46:38.287927 7f6057b62700 10 monclient(hunting): handle_monmap mon_map magic: 0 v1

-219> 2017-03-16 12:46:38.287951 7f6057b62700 10 monclient(hunting): got monmap 9, mon.noname-a is now rank -1

-218> 2017-03-16 12:46:38.287955 7f6057b62700 10 monclient(hunting): dump:

epoch 9

fsid 7797e50e-f4b3-42f6-8454-2e2b19fa41d6

last_changed 2017-02-25 08:34:26.853796

created 0.000000

0: 10.128.198.51:6789/0 mon.mon03

1: 128.104.164.197:6789/0 mon.mon01

2: 128.104.164.198:6789/0 mon.mon02

217> 2017-03-16 12:46:38.288001 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 2 ==== auth_reply(proto 2 0 (0) Success) v1 ==== 33+0+0 (1352899243 0 0) 0x7f6068a22b40 con 0x7f6068a44600216> 2017-03-16 12:46:38.288062 7f6057b62700 10 monclient(hunting): my global_id is 1455311210.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- auth(proto 2 32 bytes epoch 0) v1 -- ?+0 0x7f6068a22900 con 0x7f6068a44600

-215> 2017-03-16 12:46:38.288242 7f6057b62700 10 monclient(hunting): _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-214> 2017-03-16 12:46:38.288262 7f6057b62700 1 -213> 2017-03-16 12:46:38.494342 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 3 ==== auth_reply(proto 2 0 (0) Success) v1 ==== 222+0+0 (899327842 0 0) 0x7f6068a22d80 con 0x7f6068a44600212> 2017-03-16 12:46:38.494567 7f6057b62700 10 monclient(hunting): _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- auth(proto 2 181 bytes epoch 0) v1 -- ?+0 0x7f6068a22b40 con 0x7f6068a44600

-211> 2017-03-16 12:46:38.494575 7f6057b62700 1 -210> 2017-03-16 12:46:38.495693 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 4 ==== auth_reply(proto 2 0 (0) Success) v1 ==== 628+0+0 (1712823250 0 0) 0x7f6068a22480 con 0x7f6068a44600209> 2017-03-16 12:46:38.496194 7f6057b62700 1 monclient(hunting): found mon.mon0110.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mon_subscribe({monmap=0+}) v2 -- ?+0 0x7f6068a1f000 con 0x7f6068a44600

-208> 2017-03-16 12:46:38.496198 7f6057b62700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-207> 2017-03-16 12:46:38.496205 7f6057b62700 1 -206> 2017-03-16 12:46:38.496258 7f6057b62700 10 monclient: _check_auth_rotating renewing rotating keys (they expired before 2017-03-16 12:46:08.496256)10.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- auth(proto 2 2 bytes epoch 0) v1 -- ?+0 0x7f6068a22d80 con 0x7f6068a44600

-205> 2017-03-16 12:46:38.496272 7f6057b62700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-204> 2017-03-16 12:46:38.496276 7f6057b62700 1 -203> 2017-03-16 12:46:38.496493 7f605e746180 5 monclient: authenticate success, global_id 1455311210.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 5 ==== mon_map magic: 0 v1 ==== 485+0+0 (3508460097 0 0) 0x7f6068a22900 con 0x7f6068a44600

-202> 2017-03-16 12:46:38.496527 7f605e746180 10 monclient: wait_auth_rotating waiting (until 2017-03-16 12:47:08.496524)

-201> 2017-03-16 12:46:38.497223 7f6057b62700 1 -

-200> 2017-03-16 12:46:38.497291 7f6057b62700 10 monclient: handle_monmap mon_map magic: 0 v1

-199> 2017-03-16 12:46:38.497314 7f6057b62700 10 monclient: got monmap 9, mon.mon01 is now rank 1

-198> 2017-03-16 12:46:38.497322 7f6057b62700 10 monclient: dump:

epoch 9

fsid 7797e50e-f4b3-42f6-8454-2e2b19fa41d6

last_changed 2017-02-25 08:34:26.853796

created 0.000000

0: 10.128.198.51:6789/0 mon.mon03

1: 128.104.164.197:6789/0 mon.mon01

2: 128.104.164.198:6789/0 mon.mon02

197> 2017-03-16 12:46:38.497383 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 6 ==== auth_reply(proto 2 0 (0) Success) v1 ==== 194+0+0 (4216734023 0 0) 0x7f6068a23200 con 0x7f6068a44600196> 2017-03-16 12:46:38.497586 7f6057b62700 10 monclient: _check_auth_rotating have uptodate secrets (they expire after 2017-03-16 12:46:08.497585)10.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mon_subscribe({osdmap=0}) v2 -- ?+0 0x7f6068a1f200 con 0x7f6068a44600

-195> 2017-03-16 12:46:38.497642 7f605e746180 10 monclient: wait_auth_rotating done

-194> 2017-03-16 12:46:38.497729 7f605e746180 10 monclient: renew_subs

-193> 2017-03-16 12:46:38.497739 7f605e746180 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-192> 2017-03-16 12:46:38.497751 7f605e746180 1 -191> 2017-03-16 12:46:38.497791 7f605e746180 10 monclient: renew_subs10.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mon_subscribe({mdsmap=0+}) v2 -- ?+0 0x7f6068a1f400 con 0x7f6068a44600

-190> 2017-03-16 12:46:38.497798 7f605e746180 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-189> 2017-03-16 12:46:38.497803 7f605e746180 1 -188> 2017-03-16 12:46:38.499470 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 7 ==== osd_map(253395..253395 src has 252783..253395) v3 ==== 23526+0+0 (1120173083 0 0) 0x7f6068a23440 con 0x7f6068a44600187> 2017-03-16 12:46:38.499908 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 8 ==== mdsmap(e 4070) v1 ==== 430+0+0 (812630953 0 0) 0x7f6068a238c0 con 0x7f6068a44600186> 2017-03-16 12:46:38.499930 7f6057b62700 5 mds.mds01.hep.wisc.edu handle_mds_map epoch 4070 from mon.110.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:boot seq 1 v4070) v7 -- ?+0 0x7f6068b0c000 con 0x7f6068a44600

-185> 2017-03-16 12:46:38.499955 7f605e746180 5 asok(0x7f6068a2a000) register_command status hook 0x7f6068a063a0

-184> 2017-03-16 12:46:38.499973 7f605e746180 5 asok(0x7f6068a2a000) register_command dump_ops_in_flight hook 0x7f6068a063a0

-183> 2017-03-16 12:46:38.499978 7f605e746180 5 asok(0x7f6068a2a000) register_command ops hook 0x7f6068a063a0

-182> 2017-03-16 12:46:38.499983 7f605e746180 5 asok(0x7f6068a2a000) register_command dump_blocked_ops hook 0x7f6068a063a0

-181> 2017-03-16 12:46:38.499988 7f605e746180 5 asok(0x7f6068a2a000) register_command dump_historic_ops hook 0x7f6068a063a0

-180> 2017-03-16 12:46:38.499994 7f605e746180 5 asok(0x7f6068a2a000) register_command scrub_path hook 0x7f6068a063a0

-179> 2017-03-16 12:46:38.500002 7f605e746180 5 asok(0x7f6068a2a000) register_command tag path hook 0x7f6068a063a0

-178> 2017-03-16 12:46:38.500006 7f605e746180 5 asok(0x7f6068a2a000) register_command flush_path hook 0x7f6068a063a0

-177> 2017-03-16 12:46:38.500010 7f605e746180 5 asok(0x7f6068a2a000) register_command export dir hook 0x7f6068a063a0

-176> 2017-03-16 12:46:38.500015 7f605e746180 5 asok(0x7f6068a2a000) register_command dump cache hook 0x7f6068a063a0

-175> 2017-03-16 12:46:38.500022 7f605e746180 5 asok(0x7f6068a2a000) register_command session evict hook 0x7f6068a063a0

-174> 2017-03-16 12:46:38.500026 7f605e746180 5 asok(0x7f6068a2a000) register_command osdmap barrier hook 0x7f6068a063a0

-173> 2017-03-16 12:46:38.500031 7f605e746180 5 asok(0x7f6068a2a000) register_command session ls hook 0x7f6068a063a0

-172> 2017-03-16 12:46:38.500039 7f605e746180 5 asok(0x7f6068a2a000) register_command flush journal hook 0x7f6068a063a0

-171> 2017-03-16 12:46:38.500044 7f605e746180 5 asok(0x7f6068a2a000) register_command force_readonly hook 0x7f6068a063a0

-170> 2017-03-16 12:46:38.500048 7f605e746180 5 asok(0x7f6068a2a000) register_command get subtrees hook 0x7f6068a063a0

-169> 2017-03-16 12:46:38.500052 7f605e746180 5 asok(0x7f6068a2a000) register_command dirfrag split hook 0x7f6068a063a0

-168> 2017-03-16 12:46:38.500057 7f605e746180 5 asok(0x7f6068a2a000) register_command dirfrag merge hook 0x7f6068a063a0

-167> 2017-03-16 12:46:38.500061 7f605e746180 5 asok(0x7f6068a2a000) register_command dirfrag ls hook 0x7f6068a063a0

-166> 2017-03-16 12:46:38.500964 7f605e746180 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-165> 2017-03-16 12:46:38.500987 7f605e746180 1 -164> 2017-03-16 12:46:39.755903 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 9 ==== mdsmap(e 4071) v1 ==== 663+0+0 (405444012 0 0) 0x7f6068a23b00 con 0x7f6068a44600163> 2017-03-16 12:46:39.755931 7f6057b62700 5 mds.mds01.hep.wisc.edu handle_mds_map epoch 4071 from mon.110.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 10 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:boot seq 1 v4071) v7 ==== 147+0+0 (608848311 0 0) 0x7f6068b0c340 con 0x7f6068a44600

-162> 2017-03-16 12:46:39.755982 7f6057b62700 1 mds.mds01.hep.wisc.edu handle_mds_map standby

-161> 2017-03-16 12:46:39.756647 7f6057b62700 1 -160> 2017-03-16 12:46:39.897557 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 11 ==== mdsmap(e 4072) v1 ==== 695+0+0 (3840706189 0 0) 0x7f6068a24400 con 0x7f6068a44600159> 2017-03-16 12:46:39.897638 7f6057b62700 5 mds.mds01.hep.wisc.edu handle_mds_map epoch 4072 from mon.110.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mon_subscribe({osdmap=253396}) v2 -- ?+0 0x7f6068a20400 con 0x7f6068a44600

-158> 2017-03-16 12:46:39.897923 7f6057b62700 10 log_channel(cluster) update_config to_monitors: true to_syslog: false syslog_facility: daemon prio: info to_graylog: false graylog_host: 127.0.0.1 graylog_port: 12201)

-157> 2017-03-16 12:46:39.898069 7f6057b62700 10 monclient: renew_subs

-156> 2017-03-16 12:46:39.898076 7f6057b62700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-155> 2017-03-16 12:46:39.898083 7f6057b62700 1 -154> 2017-03-16 12:46:39.898430 7f6057b62700 1 mds.0.4072 handle_mds_map i am now mds.0.4072> up:replay

-153> 2017-03-16 12:46:39.898437 7f6057b62700 1 mds.0.4072 handle_mds_map state change up:boot -152> 2017-03-16 12:46:39.898459 7f6057b62700 1 mds.0.4072 replay_start10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 12 ==== osd_map(253396..253396 src has 252783..253396) v3 ==== 358+0+0 (3782450987 0 0) 0x7f6068a24640 con 0x7f6068a44600

-151> 2017-03-16 12:46:39.898466 7f6057b62700 1 mds.0.4072 recovery set is

-150> 2017-03-16 12:46:39.898475 7f6057b62700 1 mds.0.4072 waiting for osdmap 253396 (which blacklists prior instance)

-149> 2017-03-16 12:46:39.899404 7f6057b62700 1 -148> 2017-03-16 12:46:39.899478 7f6057b62700 10 monclient: renew_subs10.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mon_subscribe({osdmap=253397}) v2 -- ?+0 0x7f6068a20600 con 0x7f6068a44600

-147> 2017-03-16 12:46:39.899484 7f6057b62700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-146> 2017-03-16 12:46:39.899489 7f6057b62700 1 -145> 2017-03-16 12:46:39.899536 7f6053359700 2 mds.0.4072 boot_start 0: opening inotable10.128.198.55:6800/2760248606 --> 128.104.164.186:6802/9213 -- osd_op(mds.0.4072:1 28.b852b893 mds0_inotable [read 0~0] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad2b00 con 0x7f6068a44a80

-144> 2017-03-16 12:46:39.899855 7f6053359700 1 -143> 2017-03-16 12:46:39.900093 7f6053359700 2 mds.0.4072 boot_start 0: opening sessionmap10.128.198.55:6800/2760248606 --> 128.104.164.186:6800/9205 -- osd_op(mds.0.4072:2 28.3270c60b mds0_sessionmap [omap-get-header 0~0,omap-get-vals 0~16] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad2dc0 con 0x7f6068a44c00

-142> 2017-03-16 12:46:39.900236 7f6053359700 1 -141> 2017-03-16 12:46:39.900285 7f6053359700 2 mds.0.4072 boot_start 0: opening mds log10.128.198.55:6800/2760248606 --> 128.104.164.192:6801/815 -- osd_op(mds.0.4072:3 28.64e96f8f 400.00000000 [read 0~0] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad3340 con 0x7f6068a44f00

-140> 2017-03-16 12:46:39.900291 7f6053359700 5 mds.0.log open discovering log bounds

-139> 2017-03-16 12:46:39.900658 7f6052956700 4 mds.0.journalpointer Reading journal pointer '400.00000000'

-138> 2017-03-16 12:46:39.900843 7f6052956700 1 -137> 2017-03-16 12:46:39.903362 7f6053359700 2 mds.0.4072 boot_start 0: opening snap table10.128.198.55:6800/2760248606 --> 128.104.164.185:6807/26476 -- osd_op(mds.0.4072:4 28.d90270ad mds_snaptable [read 0~0] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad3080 con 0x7f6068a45080

-136> 2017-03-16 12:46:39.903706 7f6053359700 1 -135> 2017-03-16 12:46:39.906465 7f6051651700 1 -10.128.198.55:6800/2760248606 <== osd.9 128.104.164.186:6802/9213 1 ==== osd_op_reply(1 mds0_inotable [read 0~34] v0'0 uv9245 ondisk = 0) v7 ==== 133+0+34 (956867083 0 3472406751) 0x7f6068ad3600 con 0x7f6068a44a80134> 2017-03-16 12:46:39.906909 7f6051752700 1 -10.128.198.55:6800/2760248606 <== osd.23 128.104.164.186:6800/9205 1 ==== osd_op_reply(2 mds0_sessionmap [omap-get-header 0~0,omap-get-vals 0~16] v0'0 uv10562 ondisk = 0) v7 ==== 177+0+2686 (2978668618 0 671940003) 0x7f6068ad38c0 con 0x7f6068a44c00133> 2017-03-16 12:46:39.907373 7f605144f700 1 -10.128.198.55:6800/2760248606 <== osd.20 128.104.164.185:6807/26476 1 ==== osd_op_reply(4 mds_snaptable [read 0~46] v0'0 uv3930 ondisk = 0) v7 ==== 133+0+46 (835979099 0 2603725198) 0x7f6068ad3b80 con 0x7f6068a45080132> 2017-03-16 12:46:40.221832 7f6051550700 1 -10.128.198.55:6800/2760248606 <== osd.25 128.104.164.192:6801/815 1 ==== osd_op_reply(3 400.00000000 [read 0~22] v0'0 uv4652 ondisk = 0) v7 ==== 132+0+22 (2747070255 0 3825830296) 0x7f6068ad3e40 con 0x7f6068a44f00131> 2017-03-16 12:46:40.221965 7f6052956700 1 mds.0.journaler(ro) recover start10.128.198.55:6800/2760248606 --> 128.104.164.192:6801/815 -- osd_op(mds.0.4072:5 28.844f3494 200.00000000 [read 0~0] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad4100 con 0x7f6068a44f00

-130> 2017-03-16 12:46:40.221976 7f6052956700 1 mds.0.journaler(ro) read_head

-129> 2017-03-16 12:46:40.222026 7f6052956700 1 -128> 2017-03-16 12:46:40.222053 7f6052956700 4 mds.0.log Waiting for journal 200 to recover...10.128.198.55:6800/2760248606 <== osd.25 128.104.164.192:6801/815 2 ==== osd_op_reply(5 200.00000000 [read 0~90] v0'0 uv231416 ondisk = 0) v7 ==== 132+0+90 (1095256138 0 4044846266) 0x7f6068ad3e40 con 0x7f6068a44f00

-127> 2017-03-16 12:46:40.222963 7f6051550700 1 -126> 2017-03-16 12:46:40.223187 7f6053359700 1 mds.0.journaler(ro) _finish_read_head loghead(trim 57529073664, expire 57531493616, write 57568900321, stream_format 1). probing for end of log (from 57568900321)...10.128.198.55:6800/2760248606 --> 128.104.164.190:6806/12128 -- osd_op(mds.0.4072:6 28.36d3fd3b 200.0000359d [stat] snapc 0=[] ack+read+rwordered+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad3340 con 0x7f6068a45200

-125> 2017-03-16 12:46:40.223212 7f6053359700 1 mds.0.journaler(ro) probing for end of the log

-124> 2017-03-16 12:46:40.223452 7f6053359700 1 -123> 2017-03-16 12:46:40.223625 7f6053359700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.187:6801/32677 -- osd_op(mds.0.4072:7 28.4abefdb4 200.0000359e [stat] snapc 0=[] ack+read+rwordered+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad4100 con 0x7f6068a45380122> 2017-03-16 12:46:40.226838 7f605114c700 1 -10.128.198.55:6800/2760248606 <== osd.12 128.104.164.190:6806/12128 1 ==== osd_op_reply(6 200.0000359d [stat] v0'0 uv3950 ondisk = 0) v7 ==== 132+0+16 (2993314783 0 1154058251) 0x7f6068ad4940 con 0x7f6068a45200121> 2017-03-16 12:46:40.227043 7f605104b700 1 -10.128.198.55:6800/2760248606 <== osd.21 128.104.164.187:6801/32677 1 ==== osd_op_reply(7 200.0000359e [stat] v0'0 uv0 ack =2 ((2) No such file or directory)) v7 ==== 132+0+0 (148609258 0 0) 0x7f6068ad4c00 con 0x7f6068a4538010.128.198.55:6800/2760248606 --> 128.104.164.192:6801/815 -- osd_op(mds.0.4072:8 28.c5265ab3 100.00000000 [getxattr inode] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad43c0 con 0x7f6068a44f00

-120> 2017-03-16 12:46:40.227106 7f6053359700 1 mds.0.journaler(ro) _finish_probe_end write_pos = 57568900539 (header had 57568900321). recovered.

-119> 2017-03-16 12:46:40.227145 7f6052956700 4 mds.0.log Journal 200 recovered.

-118> 2017-03-16 12:46:40.227162 7f6052956700 4 mds.0.log Recovered journal 200 in format 1

-117> 2017-03-16 12:46:40.227171 7f6052956700 2 mds.0.4072 boot_start 1: loading/discovering base inodes

-116> 2017-03-16 12:46:40.227204 7f6052956700 0 mds.0.cache creating system inode with ino:100

-115> 2017-03-16 12:46:40.227465 7f6052956700 1 -114> 2017-03-16 12:46:40.227521 7f6052956700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.190:6806/12128 -- osd_op(mds.0.4072:9 28.85dde07f 100.00000000.inode [read 0~0] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad4ec0 con 0x7f6068a45200113> 2017-03-16 12:46:40.227569 7f6052956700 0 mds.0.cache creating system inode with ino:110.128.198.55:6800/2760248606 --> 128.104.164.187:6801/32677 -- osd_op(mds.0.4072:10 28.6b2cdaff 1.00000000 [getxattr inode] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad5180 con 0x7f6068a45380

-112> 2017-03-16 12:46:40.227652 7f6052956700 1 -111> 2017-03-16 12:46:40.228191 7f6052956700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.191:6800/7604 -- osd_op(mds.0.4072:11 28.232c0e14 1.00000000.inode [read 0~0] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad5440 con 0x7f6068a45f80110> 2017-03-16 12:46:40.228658 7f605114c700 1 -10.128.198.55:6800/2760248606 <== osd.12 128.104.164.190:6806/12128 2 ==== osd_op_reply(9 100.00000000.inode [read 0~508] v0'0 uv8955 ondisk = 0) v7 ==== 138+0+508 (3440302411 0 2388339745) 0x7f6068ad4940 con 0x7f6068a45200109> 2017-03-16 12:46:40.228662 7f6051550700 1 -10.128.198.55:6800/2760248606 <== osd.25 128.104.164.192:6801/815 3 ==== osd_op_reply(8 100.00000000 [getxattr] v0'0 uv0 ondisk =61 ((61) No data available)) v7 ==== 132+0+0 (1918403652 0 0) 0x7f6068ad3e40 con 0x7f6068a44f0010.128.198.55:6800/2760248606 <== osd.21 128.104.164.187:6801/32677 2 ==== osd_op_reply(10 1.00000000 [getxattr] v0'0 uv0 ondisk =

-108> 2017-03-16 12:46:40.229217 7f605104b700 1 -61 ((61) No data available)) v7 ==== 130+0+0 (561679232 0 0) 0x7f6068ad4c00 con 0x7f6068a4538010.128.198.55:6800/2760248606 <== osd.16 128.104.164.191:6800/7604 1 ==== osd_op_reply(11 1.00000000.inode [read 0~508] v0'0 uv4078 ondisk = 0) v7 ==== 136+0+508 (259529730 0 1388926158) 0x7f6068ad4100 con 0x7f6068a45f80

-107> 2017-03-16 12:46:40.231418 7f6050e49700 1 -106> 2017-03-16 12:46:40.231490 7f6053359700 2 mds.0.4072 boot_start 2: replaying mds log10.128.198.55:6800/2760248606 --> 128.104.164.185:6801/26441 -- osd_op(mds.0.4072:12 28.acedf1f2 200.00003594 [read 2419952~1774352 [fadvise_dontneed]] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad5180 con 0x7f6068a46280

-105> 2017-03-16 12:46:40.232256 7f6050d48700 1 -104> 2017-03-16 12:46:40.232395 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.191:6806/7641 -- osd_op(mds.0.4072:13 28.bc52f0f0 200.00003595 [read 0~4194304 [fadvise_dontneed]] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad3340 con 0x7f6068a46400103> 2017-03-16 12:46:40.232477 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.192:6801/815 -- osd_op(mds.0.4072:14 28.1b1cf1ea 200.00003596 [read 0~4194304 [fadvise_dontneed]] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad4ec0 con 0x7f6068a44f00102> 2017-03-16 12:46:40.232607 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.186:6800/9205 -- osd_op(mds.0.4072:15 28.df0696c3 200.00003597 [read 0~4194304 [fadvise_dontneed]] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad5700 con 0x7f6068a44c00101> 2017-03-16 12:46:40.232808 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.194:6802/12224 -- osd_op(mds.0.4072:16 28.e3c81a72 200.00003598 [read 0~4194304 [fadvise_dontneed]] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad59c0 con 0x7f6068a46580100> 2017-03-16 12:46:40.232866 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.187:6801/32677 -- osd_op(mds.0.4072:17 28.6c8e88ed 200.00003599 [read 0~4194304 [fadvise_dontneed]] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad5c80 con 0x7f6068a4538099> 2017-03-16 12:46:40.232980 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.187:6807/32732 -- osd_op(mds.0.4072:18 28.a2debcd4 200.0000359a [read 0~4194304 [fadvise_dontneed]] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad2dc0 con 0x7f6068a4670098> 2017-03-16 12:46:40.233060 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.185:6801/26441 -- osd_op(mds.0.4072:19 28.6ae4bc20 200.0000359b [read 0~4194304 [fadvise_dontneed]] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068ad5700 con 0x7f6068a4628097> 2017-03-16 12:46:40.233294 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.193:6803/10073 -- osd_op(mds.0.4072:20 28.22a6d216 200.0000359c [read 0~4194304 [fadvise_dontneed]] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068b8a000 con 0x7f6068a4688096> 2017-03-16 12:46:40.233347 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.190:6806/12128 -- osd_op(mds.0.4072:21 28.36d3fd3b 200.0000359d [read 0~2078139 [fadvise_dontneed]] snapc 0=[] ack+read+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068b8a2c0 con 0x7f6068a4520095> 2017-03-16 12:46:40.351727 7f605104b700 1 -10.128.198.55:6800/2760248606 <== osd.21 128.104.164.187:6801/32677 3 ==== osd_op_reply(17 200.00003599 [read 0~4194304 [fadvise_dontneed]] v0'0 uv6094 ondisk = 0) v7 ==== 132+0+4194304 (2713510152 0 127130748) 0x7f6068ad4c00 con 0x7f6068a4538094> 2017-03-16 12:46:40.353137 7f604fd3f700 1 -10.128.198.55:6800/2760248606 <== osd.15 128.104.164.187:6807/32732 1 ==== osd_op_reply(18 200.0000359a [read 0~4194304 [fadvise_dontneed]] v0'0 uv4747 ondisk = 0) v7 ==== 132+0+4194304 (171467895 0 1705686165) 0x7f6068ad5180 con 0x7f6068a4670093> 2017-03-16 12:46:40.407565 7f605114c700 1 -10.128.198.55:6800/2760248606 <== osd.12 128.104.164.190:6806/12128 3 ==== osd_op_reply(21 200.0000359d [read 0~2078139 [fadvise_dontneed]] v0'0 uv3950 ondisk = 0) v7 ==== 132+0+2078139 (4180118324 0 4228852073) 0x7f6068ad4940 con 0x7f6068a4520092> 2017-03-16 12:46:40.489320 7f604fe40700 1 -10.128.198.55:6800/2760248606 <== osd.19 128.104.164.194:6802/12224 1 ==== osd_op_reply(16 200.00003598 [read 0~4194304 [fadvise_dontneed]] v0'0 uv7429 ondisk = 0) v7 ==== 132+0+4194304 (2668985927 0 1760461299) 0x7f6068ad5700 con 0x7f6068a4658091> 2017-03-16 12:46:40.506571 7f604fc3e700 1 -10.128.198.55:6800/2760248606 <== osd.4 128.104.164.193:6803/10073 1 ==== osd_op_reply(20 200.0000359c [read 0~4194304 [fadvise_dontneed]] v0'0 uv5298 ondisk = 0) v7 ==== 132+0+4194304 (973477155 0 1861055686) 0x7f6068b8adc0 con 0x7f6068a4688090> 2017-03-16 12:46:40.535609 7f604ff41700 1 -10.128.198.55:6800/2760248606 <== osd.7 128.104.164.191:6806/7641 1 ==== osd_op_reply(13 200.00003595 [read 0~4194304 [fadvise_dontneed]] v0'0 uv5655 ondisk = 0) v7 ==== 132+0+4194304 (1823834050 0 3173147619) 0x7f6068b8b080 con 0x7f6068a4640089> 2017-03-16 12:46:40.537282 7f6051752700 1 -10.128.198.55:6800/2760248606 <== osd.23 128.104.164.186:6800/9205 2 ==== osd_op_reply(15 200.00003597 [read 0~4194304 [fadvise_dontneed]] v0'0 uv5126 ondisk = 0) v7 ==== 132+0+4194304 (1307230259 0 3496773095) 0x7f6068ad38c0 con 0x7f6068a44c0088> 2017-03-16 12:46:40.543758 7f6050042700 1 -10.128.198.55:6800/2760248606 <== osd.24 128.104.164.185:6801/26441 1 ==== osd_op_reply(19 200.0000359b [read 0~4194304 [fadvise_dontneed]] v0'0 uv3614 ondisk = 0) v7 ==== 132+0+4194304 (2858076625 0 2111017190) 0x7f6068b8b340 con 0x7f6068a4628087> 2017-03-16 12:46:40.572638 7f6050042700 1 -10.128.198.55:6800/2760248606 <== osd.24 128.104.164.185:6801/26441 2 ==== osd_op_reply(12 200.00003594 [read 2419952~1774352 [fadvise_dontneed]] v0'0 uv3895 ondisk = 0) v7 ==== 132+0+1774352 (3541855445 0 94634870) 0x7f6068b8b340 con 0x7f6068a4628086> 2017-03-16 12:46:40.579099 7f6051550700 1 -10.128.198.55:6800/2760248606 <== osd.25 128.104.164.192:6801/815 4 ==== osd_op_reply(14 200.00003596 [read 0~4194304 [fadvise_dontneed]] v0'0 uv4366 ondisk = 0) v7 ==== 132+0+4194304 (845072142 0 465387616) 0x7f6068ad3e40 con 0x7f6068a44f0085> 2017-03-16 12:46:40.954494 7f6050d48700 1 mds.0.4072 replay_done10.128.198.55:6800/2760248606 --> 128.104.164.190:6806/12128 -- osd_op(mds.0.4072:22 28.36d3fd3b 200.0000359d [zero 2078139~2116165] snapc 0=[] ondisk+write+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068b8a840 con 0x7f6068a45200

-84> 2017-03-16 12:46:40.954526 7f6050d48700 1 mds.0.4072 making mds journal writeable

-83> 2017-03-16 12:46:40.954529 7f6050d48700 1 mds.0.journaler(ro) set_writeable

-82> 2017-03-16 12:46:40.954644 7f6050d48700 1 -81> 2017-03-16 12:46:40.954901 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.187:6801/32677 -- osd_op(mds.0.4072:23 28.4abefdb4 200.0000359e [delete] snapc 0=[] ondisk+write+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068b8ab00 con 0x7f6068a4538080> 2017-03-16 12:46:40.955140 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.185:6804/26446 -- osd_op(mds.0.4072:24 28.a08aa029 200.0000359f [delete] snapc 0=[] ondisk+write+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068b8a580 con 0x7f6068a46a0079> 2017-03-16 12:46:40.955176 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.191:6806/7641 -- osd_op(mds.0.4072:25 28.ba5e12ec 200.000035a0 [delete] snapc 0=[] ondisk+write+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068b8b600 con 0x7f6068a4640078> 2017-03-16 12:46:40.955212 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.185:6804/26446 -- osd_op(mds.0.4072:26 28.55fd185f 200.000035a1 [delete] snapc 0=[] ondisk+write+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068b8b8c0 con 0x7f6068a46a0077> 2017-03-16 12:46:40.955574 7f6050d48700 1 -10.128.198.55:6800/2760248606 --> 128.104.164.187:6807/32732 -- osd_op(mds.0.4072:27 28.b95c282d 200.000035a2 [delete] snapc 0=[] ondisk+write+known_if_redirected+full_force e253396) v7 -- ?+0 0x7f6068b8bb80 con 0x7f6068a4670076> 2017-03-16 12:46:40.955648 7f6050d48700 2 mds.0.4072 i am alone, moving to state reconnect10.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 2 v4072) v7 -- ?+0 0x7f6068b0cd00 con 0x7f6068a44600

-75> 2017-03-16 12:46:40.955666 7f6050d48700 3 mds.0.4072 request_state up:reconnect

-74> 2017-03-16 12:46:40.955695 7f6050d48700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-73> 2017-03-16 12:46:40.955720 7f6050d48700 1 -72> 2017-03-16 12:46:40.956038 7f605114c700 1 -10.128.198.55:6800/2760248606 <== osd.12 128.104.164.190:6806/12128 4 ==== osd_op_reply(22 200.0000359d [zero 2078139~2116165] v0'0 uv3950 ondisk = 0) v7 ==== 132+0+0 (283401261 0 0) 0x7f6068ad4940 con 0x7f6068a4520071> 2017-03-16 12:46:40.956073 7f605104b700 1 -10.128.198.55:6800/2760248606 <== osd.21 128.104.164.187:6801/32677 4 ==== osd_op_reply(23 200.0000359e [delete] v0'0 uv6891 ondisk =2 ((2) No such file or directory)) v7 ==== 132+0+0 (682401803 0 0) 0x7f6068ad4c00 con 0x7f6068a4538010.128.198.55:6800/2760248606 <== osd.7 128.104.164.191:6806/7641 2 ==== osd_op_reply(25 200.000035a0 [delete] v0'0 uv2604 ondisk =

-70> 2017-03-16 12:46:40.956404 7f604ff41700 1 -2 ((2) No such file or directory)) v7 ==== 132+0+0 (2720394826 0 0) 0x7f6068b8b080 con 0x7f6068a4640010.128.198.55:6800/2760248606 <== osd.15 128.104.164.187:6807/32732 2 ==== osd_op_reply(27 200.000035a2 [delete] v0'0 uv5770 ondisk =

-69> 2017-03-16 12:46:40.956855 7f604fd3f700 1 -2 ((2) No such file or directory)) v7 ==== 132+0+0 (3112330756 0 0) 0x7f6068ad5180 con 0x7f6068a4670010.128.198.55:6800/2760248606 <== osd.17 128.104.164.185:6804/26446 1 ==== osd_op_reply(24 200.0000359f [delete] v0'0 uv6786 ondisk =

-68> 2017-03-16 12:46:40.958577 7f604fa3c700 1 -2 ((2) No such file or directory)) v7 ==== 132+0+0 (4221788746 0 0) 0x7f6068ad2dc0 con 0x7f6068a46a0010.128.198.55:6800/2760248606 <== osd.17 128.104.164.185:6804/26446 2 ==== osd_op_reply(26 200.000035a1 [delete] v0'0 uv4463 ondisk =

-67> 2017-03-16 12:46:40.958640 7f604fa3c700 1 -2 ((2) No such file or directory)) v7 ==== 132+0+0 (826999402 0 0) 0x7f6068ad2dc0 con 0x7f6068a46a0010.128.198.55:6800/2760248606 >> 128.104.164.197:6789/0 pipe(0x7f6068aec000 sd=9 :48510 s=2 pgs=61333 cs=1 l=1 c=0x7f6068a44600).reader got KEEPALIVE_ACK

-66> 2017-03-16 12:46:41.076034 7f605635f700 10 monclient: tick

-65> 2017-03-16 12:46:41.076053 7f605635f700 10 monclient: _check_auth_rotating have uptodate secrets (they expire after 2017-03-16 12:46:11.076050)

-64> 2017-03-16 12:46:41.076796 7f605dc36700 2 -63> 2017-03-16 12:46:42.210989 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 13 ==== mdsmap(e 4073) v1 ==== 695+0+0 (2687855982 0 0) 0x7f6068a24880 con 0x7f6068a4460062> 2017-03-16 12:46:42.211018 7f6057b62700 5 mds.mds01.hep.wisc.edu handle_mds_map epoch 4073 from mon.1> up:reconnect

-61> 2017-03-16 12:46:42.211070 7f6057b62700 1 mds.0.4072 handle_mds_map i am now mds.0.4072

-60> 2017-03-16 12:46:42.211074 7f6057b62700 1 mds.0.4072 handle_mds_map state change up:replay -59> 2017-03-16 12:46:42.211094 7f6057b62700 1 mds.0.4072 reconnect_start5 sessions

-58> 2017-03-16 12:46:42.211098 7f6057b62700 1 mds.0.4072 reopen_log

-57> 2017-03-16 12:46:42.211105 7f6057b62700 1 mds.0.server reconnect_clients -56> 2017-03-16 12:46:42.211167 7f6057b62700 5 mds.0.bal rebalance done10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 14 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 2 v4073) v7 ==== 143+0+0 (3934735392 0 0) 0x7f6068b0c680 con 0x7f6068a44600

-55> 2017-03-16 12:46:42.212269 7f6057b62700 1 -54> 2017-03-16 12:46:44.955944 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 3 v4073) v7 -- ?+0 0x7f6068b0c000 con 0x7f6068a44600

-53> 2017-03-16 12:46:44.955991 7f6054b5c700 1 -52> 2017-03-16 12:46:45.004781 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 15 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 3 v4073) v7 ==== 143+0+0 (1599022310 0 0) 0x7f6068b0c9c0 con 0x7f6068a4460051> 2017-03-16 12:46:48.956072 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 4 v4073) v7 -- ?+0 0x7f6068b0cd00 con 0x7f6068a44600

-50> 2017-03-16 12:46:48.956104 7f6054b5c700 1 -49> 2017-03-16 12:46:48.958146 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 16 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 4 v4073) v7 ==== 143+0+0 (1476568487 0 0) 0x7f6068b0d040 con 0x7f6068a4460048> 2017-03-16 12:46:50.845713 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 17 ==== osd_map(253397..253397 src has 252783..253397) v3 ==== 350+0+0 (3798802921 0 0) 0x7f6068a24ac0 con 0x7f6068a4460047> 2017-03-16 12:46:50.845838 7f6057b62700 10 monclient: renew_subs10.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mon_subscribe({osdmap=253398}) v2 -- ?+0 0x7f6068a20800 con 0x7f6068a44600

-46> 2017-03-16 12:46:50.845849 7f6057b62700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-45> 2017-03-16 12:46:50.845856 7f6057b62700 1 -44> 2017-03-16 12:46:51.076197 7f605635f700 10 monclient: tick10.128.198.55:6800/2760248606 >> 128.104.164.197:6789/0 pipe(0x7f6068aec000 sd=9 :48510 s=2 pgs=61333 cs=1 l=1 c=0x7f6068a44600).reader got KEEPALIVE_ACK

-43> 2017-03-16 12:46:51.076216 7f605635f700 10 monclient: _check_auth_rotating have uptodate secrets (they expire after 2017-03-16 12:46:21.076213)

-42> 2017-03-16 12:46:51.076822 7f605dc36700 2 -41> 2017-03-16 12:46:52.956207 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 5 v4073) v7 -- ?+0 0x7f6068b0d380 con 0x7f6068a44600

-40> 2017-03-16 12:46:52.956246 7f6054b5c700 1 -39> 2017-03-16 12:46:52.958300 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 18 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 5 v4073) v7 ==== 143+0+0 (3989491041 0 0) 0x7f6068b0c000 con 0x7f6068a4460038> 2017-03-16 12:46:56.956339 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 6 v4073) v7 -- ?+0 0x7f6068b0da00 con 0x7f6068a44600

-37> 2017-03-16 12:46:56.956372 7f6054b5c700 1 -36> 2017-03-16 12:46:56.958551 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 19 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 6 v4073) v7 ==== 143+0+0 (914227930 0 0) 0x7f6068b0cd00 con 0x7f6068a4460035> 2017-03-16 12:47:00.956468 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 7 v4073) v7 -- ?+0 0x7f6068b0dd40 con 0x7f6068a44600

-34> 2017-03-16 12:47:00.956502 7f6054b5c700 1 -33> 2017-03-16 12:47:00.958619 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 20 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 7 v4073) v7 ==== 143+0+0 (2209770012 0 0) 0x7f6068b0d380 con 0x7f6068a4460032> 2017-03-16 12:47:01.076380 7f605635f700 10 monclient: tick10.128.198.55:6800/2760248606 >> 128.104.164.197:6789/0 pipe(0x7f6068aec000 sd=9 :48510 s=2 pgs=61333 cs=1 l=1 c=0x7f6068a44600).reader got KEEPALIVE_ACK

-31> 2017-03-16 12:47:01.076426 7f605635f700 10 monclient: _check_auth_rotating have uptodate secrets (they expire after 2017-03-16 12:46:31.076422)

-30> 2017-03-16 12:47:01.077219 7f605dc36700 2 -29> 2017-03-16 12:47:04.956599 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 8 v4073) v7 -- ?+0 0x7f6068b0e080 con 0x7f6068a44600

-28> 2017-03-16 12:47:04.956633 7f6054b5c700 1 -27> 2017-03-16 12:47:04.958709 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 21 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 8 v4073) v7 ==== 143+0+0 (954531928 0 0) 0x7f6068b0d6c0 con 0x7f6068a4460026> 2017-03-16 12:47:08.956735 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 9 v4073) v7 -- ?+0 0x7f6068b0e3c0 con 0x7f6068a44600

-25> 2017-03-16 12:47:08.956771 7f6054b5c700 1 -24> 2017-03-16 12:47:08.958866 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 22 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 9 v4073) v7 ==== 143+0+0 (2368563358 0 0) 0x7f6068b0da00 con 0x7f6068a4460023> 2017-03-16 12:47:11.076587 7f605635f700 10 monclient: tick10.128.198.55:6800/2760248606 >> 128.104.164.197:6789/0 pipe(0x7f6068aec000 sd=9 :48510 s=2 pgs=61333 cs=1 l=1 c=0x7f6068a44600).reader got KEEPALIVE_ACK

-22> 2017-03-16 12:47:11.076623 7f605635f700 10 monclient: _check_auth_rotating have uptodate secrets (they expire after 2017-03-16 12:46:41.076619)

-21> 2017-03-16 12:47:11.077317 7f605dc36700 2 -20> 2017-03-16 12:47:12.956849 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 10 v4073) v7 -- ?+0 0x7f6068b0e700 con 0x7f6068a44600

-19> 2017-03-16 12:47:12.956873 7f6054b5c700 1 -18> 2017-03-16 12:47:12.959075 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 23 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 10 v4073) v7 ==== 143+0+0 (1452910373 0 0) 0x7f6068b0dd40 con 0x7f6068a4460017> 2017-03-16 12:47:15.975474 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 24 ==== osd_map(253398..253398 src has 252783..253398) v3 ==== 350+0+0 (3392048459 0 0) 0x7f6068a24d00 con 0x7f6068a4460016> 2017-03-16 12:47:15.975604 7f6057b62700 10 monclient: renew_subs10.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mon_subscribe({osdmap=253399}) v2 -- ?+0 0x7f6068a20a00 con 0x7f6068a44600

-15> 2017-03-16 12:47:15.975610 7f6057b62700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/0

-14> 2017-03-16 12:47:15.975615 7f6057b62700 1 -13> 2017-03-16 12:47:16.956998 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 11 v4073) v7 -- ?+0 0x7f6068b0ea40 con 0x7f6068a44600

-12> 2017-03-16 12:47:16.957034 7f6054b5c700 1 -11> 2017-03-16 12:47:17.193643 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 25 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 11 v4073) v7 ==== 143+0+0 (3813789667 0 0) 0x7f6068b0e080 con 0x7f6068a4460010> 2017-03-16 12:47:20.957128 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 12 v4073) v7 -- ?+0 0x7f6068b0ed80 con 0x7f6068a44600

-9> 2017-03-16 12:47:20.957160 7f6054b5c700 1 -8> 2017-03-16 12:47:21.056637 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 26 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 12 v4073) v7 ==== 143+0+0 (3827060386 0 0) 0x7f6068b0e3c0 con 0x7f6068a446007> 2017-03-16 12:47:21.076758 7f605635f700 10 monclient: tick10.128.198.55:6800/2760248606 >> 128.104.164.197:6789/0 pipe(0x7f6068aec000 sd=9 :48510 s=2 pgs=61333 cs=1 l=1 c=0x7f6068a44600).reader got KEEPALIVE_ACK

-6> 2017-03-16 12:47:21.076780 7f605635f700 10 monclient: _check_auth_rotating have uptodate secrets (they expire after 2017-03-16 12:46:51.076777)

-5> 2017-03-16 12:47:21.077443 7f605dc36700 2 -4> 2017-03-16 12:47:24.957262 7f6054b5c700 10 monclient: _send_mon_message to mon.mon01 at 128.104.164.197:6789/010.128.198.55:6800/2760248606 --> 128.104.164.197:6789/0 -- mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 13 v4073) v7 -- ?+0 0x7f6068b0f400 con 0x7f6068a44600

-3> 2017-03-16 12:47:24.957295 7f6054b5c700 1 -2> 2017-03-16 12:47:25.066120 7f6057b62700 1 -10.128.198.55:6800/2760248606 <== mon.1 128.104.164.197:6789/0 27 ==== mdsbeacon(14553112/mds01.hep.wisc.edu up:reconnect seq 13 v4073) v7 ==== 143+0+0 (1372857956 0 0) 0x7f6068b0f0c0 con 0x7f6068a44600

-1> 2017-03-16 12:47:28.502417 7f605535d700 1 mds.0.server reconnect gave up on client.14384220 10.128.198.55:0/2012593454

0> 2017-03-16 12:47:28.505126 7f605535d700 -1 ./include/interval_set.h: In function 'void interval_set<T>::insert(T, T, T*, T*) [with T = inodeno_t]' thread 7f605535d700 time 2017-03-16 12:47:28.502496

./include/interval_set.h: 355: FAILED assert(0)ceph version 10.2.6 (656b5b63ed7c43bd014bcafd81b001959d5f089f)

1: (ceph::__ceph_assert_fail(char const*, char const*, int, char const*)+0x82) [0x7f605e248ed2]

2: (()+0x1ea5fe) [0x7f605de245fe]

3: (InoTable::project_release_ids(interval_set<inodeno_t>&)+0x917) [0x7f605e065ad7]

4: (Server::journal_close_session(Session*, int, Context*)+0x18e) [0x7f605de89f1e]

5: (Server::kill_session(Session*, Context*)+0x133) [0x7f605de8bf23]

6: (Server::reconnect_tick()+0x148) [0x7f605de8d378]

7: (MDSRankDispatcher::tick()+0x389) [0x7f605de524d9]

8: (Context::complete(int)+0x9) [0x7f605de3fcd9]

9: (SafeTimer::timer_thread()+0x104) [0x7f605e239e84]

10: (SafeTimerThread::entry()+0xd) [0x7f605e23ad2d]

11: (()+0x8064) [0x7f605d53d064]

12: (clone()+0x6d) [0x7f605ba8262d]

NOTE: a copy of the executable, or `objdump -rdS <executable>` is needed to interpret this.--- logging levels ---

0/ 5 none

0/ 1 lockdep

0/ 1 context

1/ 1 crush

1/ 5 mds

1/ 5 mds_balancer

1/ 5 mds_locker

1/ 5 mds_log

1/ 5 mds_log_expire

1/ 5 mds_migrator

0/ 1 buffer

0/ 1 timer

0/ 1 filer

0/ 1 striper

0/ 1 objecter

0/ 5 rados

0/ 5 rbd

0/ 5 rbd_mirror

0/ 5 rbd_replay

0/ 5 journaler

0/ 5 objectcacher

0/ 5 client

0/ 5 osd

0/ 5 optracker

0/ 5 objclass

1/ 3 filestore

1/ 3 journal

0/ 5 ms

1/ 5 mon

0/10 monc

1/ 5 paxos

0/ 5 tp

1/ 5 auth

1/ 5 crypto

1/ 1 finisher

1/ 5 heartbeatmap

1/ 5 perfcounter

1/ 5 rgw

1/10 civetweb

1/ 5 javaclient

1/ 5 asok

1/ 1 throttle

0/ 0 refs

1/ 5 xio

1/ 5 compressor

1/ 5 newstore

1/ 5 bluestore

1/ 5 bluefs

1/ 3 bdev

1/ 5 kstore

4/ 5 rocksdb

4/ 5 leveldb

1/ 5 kinetic

1/ 5 fuse2/-2 (syslog threshold)end dump of recent events ---

-1/-1 (stderr threshold)

max_recent 10000

max_new 1000

log_file /var/log/ceph/ceph-mds.mds01.hep.wisc.edu.log

--

2017-03-16 12:47:28.509676 7f605535d700 -1 ** Caught signal (Aborted) *

in thread 7f605535d700 thread_name:safe_timerceph version 10.2.6 (656b5b63ed7c43bd014bcafd81b001959d5f089f)

1: (()+0x505637) [0x7f605e13f637]

2: (()+0xf890) [0x7f605d544890]

3: (gsignal()+0x37) [0x7f605b9cf067]

4: (abort()+0x148) [0x7f605b9d0448]

5: (ceph::__ceph_assert_fail(char const*, char const*, int, char const*)+0x256) [0x7f605e2490a6]

6: (()+0x1ea5fe) [0x7f605de245fe]

7: (InoTable::project_release_ids(interval_set<inodeno_t>&)+0x917) [0x7f605e065ad7]

8: (Server::journal_close_session(Session*, int, Context*)+0x18e) [0x7f605de89f1e]

9: (Server::kill_session(Session*, Context*)+0x133) [0x7f605de8bf23]

10: (Server::reconnect_tick()+0x148) [0x7f605de8d378]

11: (MDSRankDispatcher::tick()+0x389) [0x7f605de524d9]

12: (Context::complete(int)+0x9) [0x7f605de3fcd9]

13: (SafeTimer::timer_thread()+0x104) [0x7f605e239e84]

14: (SafeTimerThread::entry()+0xd) [0x7f605e23ad2d]

15: (()+0x8064) [0x7f605d53d064]

16: (clone()+0x6d) [0x7f605ba8262d]

NOTE: a copy of the executable, or `objdump -rdS <executable>` is needed to interpret this.--- begin dump of recent events ---

0> 2017-03-16 12:47:28.509676 7f605535d700 -1 ** Caught signal (Aborted) *

in thread 7f605535d700 thread_name:safe_timerceph version 10.2.6 (656b5b63ed7c43bd014bcafd81b001959d5f089f)

1: (()+0x505637) [0x7f605e13f637]

2: (()+0xf890) [0x7f605d544890]

3: (gsignal()+0x37) [0x7f605b9cf067]

4: (abort()+0x148) [0x7f605b9d0448]

5: (ceph::__ceph_assert_fail(char const*, char const*, int, char const*)+0x256) [0x7f605e2490a6]

6: (()+0x1ea5fe) [0x7f605de245fe]

7: (InoTable::project_release_ids(interval_set<inodeno_t>&)+0x917) [0x7f605e065ad7]

8: (Server::journal_close_session(Session*, int, Context*)+0x18e) [0x7f605de89f1e]

9: (Server::kill_session(Session*, Context*)+0x133) [0x7f605de8bf23]

10: (Server::reconnect_tick()+0x148) [0x7f605de8d378]

11: (MDSRankDispatcher::tick()+0x389) [0x7f605de524d9]

12: (Context::complete(int)+0x9) [0x7f605de3fcd9]

13: (SafeTimer::timer_thread()+0x104) [0x7f605e239e84]

14: (SafeTimerThread::entry()+0xd) [0x7f605e23ad2d]

15: (()+0x8064) [0x7f605d53d064]

16: (clone()+0x6d) [0x7f605ba8262d]

NOTE: a copy of the executable, or `objdump -rdS <executable>` is needed to interpret this.--- logging levels ---

0/ 5 none

0/ 1 lockdep

0/ 1 context

1/ 1 crush

1/ 5 mds

1/ 5 mds_balancer

1/ 5 mds_locker

1/ 5 mds_log

1/ 5 mds_log_expire

1/ 5 mds_migrator

0/ 1 buffer

0/ 1 timer

0/ 1 filer

0/ 1 striper

0/ 1 objecter

0/ 5 rados

0/ 5 rbd

0/ 5 rbd_mirror

0/ 5 rbd_replay

0/ 5 journaler

0/ 5 objectcacher

0/ 5 client

0/ 5 osd

0/ 5 optracker

0/ 5 objclass

1/ 3 filestore

1/ 3 journal

0/ 5 ms

1/ 5 mon

0/10 monc

1/ 5 paxos

0/ 5 tp

1/ 5 auth

1/ 5 crypto

1/ 1 finisher

1/ 5 heartbeatmap

1/ 5 perfcounter

1/ 5 rgw

1/10 civetweb

1/ 5 javaclient

1/ 5 asok

1/ 1 throttle

0/ 0 refs

1/ 5 xio

1/ 5 compressor

1/ 5 newstore

1/ 5 bluestore

1/ 5 bluefs

1/ 3 bdev

1/ 5 kstore

4/ 5 rocksdb

4/ 5 leveldb

1/ 5 kinetic

1/ 5 fuse2/-2 (syslog threshold)end dump of recent events ---

-1/-1 (stderr threshold)

max_recent 10000

max_new 1000

log_file /var/log/ceph/ceph-mds.mds01.hep.wisc.edu.log

--

Updated by John Spray about 7 years ago

I don't have any brilliant ideas for debugging this (can't go back in time to find out how those bogus inode ranges made it into the session map), but I do think we should fix this to validate the inode ranges in the sessions after replay, and remove any sessions that look wrong (while firing off some loud complaints to the cluster log)

Updated by c sights about 7 years ago

If anyone needs the debug level "30/30" dump I can send it directly to them.

Also, so far I have not nuked the cephfs which does not allow the mds to start. John, do you think I should nuke and start over or wait to test a new release?

Thanks!

C.

Updated by John Spray about 7 years ago

A patch that should allow MDSs to throw out the invalid sessions and start up. Does not fix whatever got the system into this state to begin with, but in general we don't want MDSs to die completely when they have something invalid on disk like this.

Updated by John Spray about 7 years ago

Created ticket for the workaround and marked it for backport here: http://tracker.ceph.com/issues/19708

I should have created that before really so that I could reference it from the commit, but oh well.

Updated by Patrick Donnelly about 6 years ago

- Subject changed from Replacement MDS crashes on InoTable release to mds: replacement MDS crashes on InoTable release

- Assignee set to Patrick Donnelly

- Target version changed from v12.0.0 to v13.0.0

- Backport set to jewel,luminous

Updated by Zheng Yan almost 6 years ago

make we should mark this as "need more info" or "can't reproduce"

Updated by Patrick Donnelly almost 6 years ago

- Status changed from New to Can't reproduce

Updated by Ivan Guan over 5 years ago

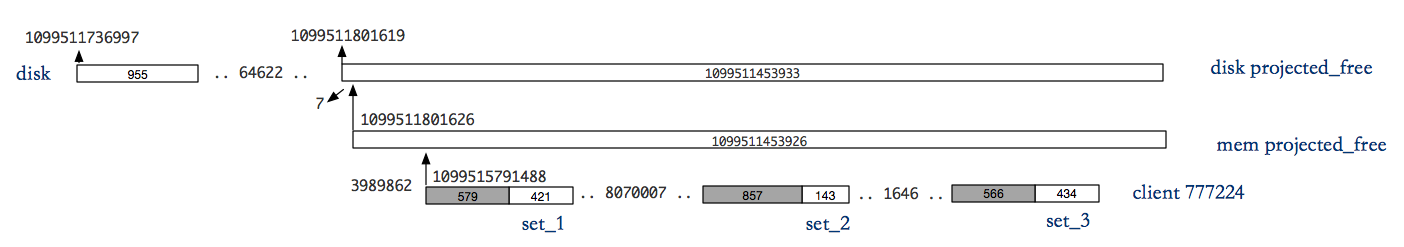

- File inode-free-prealloc.png inode-free-prealloc.png added

I think the patch https://github.com/ceph/ceph/pull/14164 can't resolve this bug completely.For example my situation:

ceph version: jewel 10.2.2

2018-10-26 15:53:01.089865 7fa0ecbe9700 1 mds.0.server reconnect gave up on client.777224 192.168.1.172:0/3315197400 //表明从client.777224 这里除了问题

2018-10-26 15:53:01.089869 7fa0ecbe9700 1 mds.0.server peng kill_session

2018-10-26 15:53:01.410457 7fa0ecbe9700 -1 ./include/interval_set.h: In function 'void interval_set<T>::insert(T, T, T*, T*) [with T = inodeno_t]' thread 7fa0ecbe9700 time 2018-10-26 15:53:01.089877

./include/interval_set.h: 355: FAILED assert(0)

(gdb) bt

#0 0x00007fe983a6ffcb in raise () from /lib64/libpthread.so.0

#1 0x00007fe984b9a4a5 in reraise_fatal (signum=6) at global/signal_handler.cc:71

#2 handle_fatal_signal (signum=6) at global/signal_handler.cc:133

#3 <signal handler called>

#4 0x00007fe9824745f7 in raise () from /lib64/libc.so.6

#5 0x00007fe982475ce8 in abort () from /lib64/libc.so.6

#6 0x00007fe984c952b7 in ceph::__ceph_assert_fail (assertion=assertion@entry=0x7fe984ebfcd1 "0",

file=file@entry=0x7fe984e1dcb6 "./include/interval_set.h", line=line@entry=355,

func=func@entry=0x7fe984e43cc0 <_ZZN12interval_setI9inodeno_tE6insertES0_S0_PS0_S2_E19__PRETTY_FUNCTION__> "void interval_set<T>::insert(T, T, T*, T*) [with T = inodeno_t]") at common/assert.cc:78

#7 0x00007fe984aba82a in interval_set<inodeno_t>::insert (this=0x7fe98f85a830, start=..., len=..., pstart=0x0, plen=0x0)

at include/interval_set.h:355

#8 0x00007fe984ab9ac5 in insert (a=..., this=0x7fe98f85a830) at include/interval_set.h:439

#9 InoTable::project_release_ids (this=0x7fe98f85a780, ids=...) at mds/InoTable.cc:87

#10 0x00007fe9848df58f in Server::journal_close_session (this=this@entry=0x7fe98f817c00, session=session@entry=0x7fe98fabe700,

state=state@entry=5, on_safe=on_safe@entry=0x0) at mds/Server.cc:749

#11 0x00007fe9848e1a61 in Server::kill_session (this=this@entry=0x7fe98f817c00, session=session@entry=0x7fe98fabe700,

on_safe=on_safe@entry=0x0) at mds/Server.cc:723

#12 0x00007fe9848e3580 in Server::reconnect_tick (this=0x7fe98f817c00) at mds/Server.cc:937

#13 0x00007fe98489ec39 in MDSRankDispatcher::tick (this=0x7fe98f8d8600) at mds/MDSRank.cc:200

#14 0x00007fe98488c0f9 in Context::complete (this=0x7fe98f7ec3f0, r=<optimized out>) at include/Context.h:64

#15 0x00007fe984c858e4 in SafeTimer::timer_thread (this=0x7fe98f87c078) at common/Timer.cc:105

#16 0x00007fe984c8731d in SafeTimerThread::entry (this=<optimized out>) at common/Timer.cc:38

#17 0x00007fe983a68dc5 in start_thread () from /lib64/libpthread.so.0

#18 0x00007fe98253528d in clone () from /lib64/libc.so.6

p projected_free

$1 = {_size = 1099511454347, m = std::map with 1 elements = {[{val = 1099511801626}] = {val = 1099511453926}

1000002a71a fffffd58e6

[1000002a71a~fffffd58e6]

p ids

$2 = (interval_set<inodeno_t> &) @0x7fe97bf4ac90: {_size = 998, m = std::map with 3 elements =

{[{val = 1099515791488}] = {val = 421}, 100003f8880 ~ 421

[{val = 1099523861495}] = {val = 143}, 10000baabf7 ~ 143

[{val = 1099523863141}] = {val = 434}}} 10000bab265 ~ 434

cephfs-table-tool all show inode

{

"0": {

"data": {

"version": 348,

"inotable": {

"projected_free": [

{

"start": 1099511736997, 1000001aaa5

"len": 995 3e3

},

{

"start": 1099511801619, 1000002a713

"len": 1099511453933 fffffd58ed

}

],

"free": [

{

"start": 1099511736997,

"len": 995

},

{

"start": 1099511801619,

"len": 1099511453933

}

]

}

},

"result": 0

}

}

cephfs-table-tool all show session —cluster xtao

{

"entity name": {

"type": "client",

"num": 777224

},

"version": 168324,

"state": "open",

"Session info": {

"inst": "client.777224 192.168.1.172:0\/3315197400",

"completed_requests": [],

"prealloc_inos": [

{

"start": 1099515791488,

"length": 421

},

{

"start": 1099523861495,

"length": 143

},

{

"start": 1099523863141,

"length": 434

}

],

"used_inos": [],

"ceph_sha1": "d005c4599f9a5897ac0d789b100f3bc34570ff9a",

"ceph_version": "ceph version ummit3.0-beta1-46-gd005c45 (d005c4599f9a5897ac0d789b100f3bc34570ff9a)",

"entity_id": "admin",

"hostname": "xtao_anna2",

"mount_point": "\/mnt\/sss110",

"root": "\/"

}

}

According the patch will delete the intersection of prealloc_inos and projected_free from session info.But thus will lead inode reuse, so we should delete all inodes less than set_3 including it from projected free in function validate_session to prevent that from happening.

Updated by Ivan Guan over 5 years ago

https://github.com/ceph/ceph/pull/24942 can resolve this problem