
Bug #62681

high virtual memory consumption when dealing with Chunked Upload (closed)

% Done: 100%

Source: Community (user)

Tags: aws-chunked backport_processed

Backport: pacific quincy reef

Regression: No

Severity: 3 - minor

Reviewed:

Description

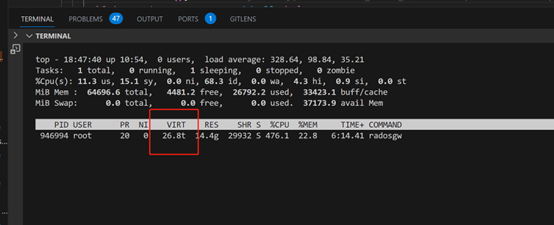

I used MinIO warp to benchmark RGW and observed that its virtual memory grew to the TB level. warp uses Chunked Upload, and each 64 KB chunk is signed.

2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 HTTP_HOST=127.0.0.1:8000
2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 HTTP_USER_AGENT=MinIO (linux; amd64) minio-go/v7.0.31 warp/0.6.3
2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 HTTP_VERSION=1.1
2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 HTTP_X_AMZ_CONTENT_SHA256=STREAMING-AWS4-HMAC-SHA256-PAYLOAD
2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 HTTP_X_AMZ_DATE=20230903T192246Z
2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 HTTP_X_AMZ_DECODED_CONTENT_LENGTH=26214400
2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 REMOTE_ADDR=127.0.0.1
2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 REQUEST_METHOD=PUT
2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 REQUEST_URI=/warp-multi-write-100m/xe3Ybzg8/10.t4AUUb1LC3qQV%28qN.rnd
2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 SCRIPT_URI=/warp-multi-write-100m/xe3Ybzg8/10.t4AUUb1LC3qQV%28qN.rnd
2023-09-03T15:22:46.545-0400 7f4fe9e93700 20 SERVER_PORT=8000
2023-09-03T15:22:46.545-0400 7f4fe9e93700 1 ====== starting new request req=0x7f51cc433730 =====
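To give a feel for how many signed chunks RGW has to decode per request, here is a minimal sketch of the aws-chunked framing arithmetic for the PUT logged above. It assumes that "64kb" means 64 KiB (65536 bytes) and that each chunk is framed as in the AWS Signature Version 4 streaming format (hex size, `;chunk-signature=` plus a 64-character hex signature, CRLF, data, CRLF, with a terminating zero-length chunk). The function names are hypothetical and this is not RGW code.

```python
# Assumption: "64kb" in the report is taken as 64 KiB.
CHUNK_SIZE = 64 * 1024
# A SigV4 chunk signature is a hex-encoded HMAC-SHA256 (64 characters).
SIGNATURE_HEX_LEN = 64


def chunk_sizes(decoded_length, chunk_size=CHUNK_SIZE):
    """Return the data chunk sizes followed by the terminating 0-byte chunk."""
    full, rest = divmod(decoded_length, chunk_size)
    sizes = [chunk_size] * full
    if rest:
        sizes.append(rest)
    sizes.append(0)  # final chunk that marks the end of the stream
    return sizes


def framed_length(size):
    """Bytes on the wire for one chunk: header line, data, trailing CRLF."""
    header = (
        len(format(size, "x"))
        + len(";chunk-signature=")
        + SIGNATURE_HEX_LEN
        + len("\r\n")
    )
    return header + size + len("\r\n")


def encoded_length(decoded_length, chunk_size=CHUNK_SIZE):
    """Total request body length after aws-chunked framing."""
    return sum(framed_length(s) for s in chunk_sizes(decoded_length, chunk_size))


if __name__ == "__main__":
    decoded = 26214400  # HTTP_X_AMZ_DECODED_CONTENT_LENGTH from the log
    print(len(chunk_sizes(decoded)) - 1, "data chunks")
    print(encoded_length(decoded), "bytes on the wire")
```

Under these assumptions a single request carries hundreds of separately signed chunks, so any per-chunk buffer that is retained longer than needed is multiplied by the chunk count and by the 128 concurrent uploads in the warp run.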

warp put --host=127.0.0.1:8000 --bucket=warp-multi-write-100m --access-key=0555b35654ad1656d804 --secret-key=h7GhxuBLTrlhVUyxSPUKUV8r/2EI4ngqJxD7iBdBYLhwluN30JaT3Q== --obj.size=1MiB --duration=1m --concurrent=128 --benchdata=200M-multi-write -q --disable-multipart

The RGW perf dump shows buffer_anon_bytes at about 2.9 TB (roughly 2.66 TiB):

"bluefs_file_writer_items": 0,

"buffer_anon_bytes": 2926080809009,

"buffer_anon_items": 21865,

"buffer_meta_bytes": 1918928,

"buffer_meta_items": 21806,
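As a quick sanity check on these counters, the following sketch converts buffer_anon_bytes to TB and TiB and divides it by buffer_anon_items. The values are copied from the excerpt above; the `summarize` helper is hypothetical, and the average is only arithmetic on the two counters, since the report does not say how the bytes are distributed across items.

```python
import json

# Counter values copied from the perf dump excerpt in this report.
PERF_DUMP_EXCERPT = """
{
  "buffer_anon_bytes": 2926080809009,
  "buffer_anon_items": 21865,
  "buffer_meta_bytes": 1918928,
  "buffer_meta_items": 21806
}
"""


def summarize(counters):
    """Derive human-readable figures from the buffer_anon counters."""
    anon_bytes = counters["buffer_anon_bytes"]
    anon_items = counters["buffer_anon_items"]
    return {
        "anon_tb": anon_bytes / 10**12,    # decimal terabytes
        "anon_tib": anon_bytes / 2**40,    # binary tebibytes
        "avg_bytes_per_item": anon_bytes / anon_items,
    }


summary = summarize(json.loads(PERF_DUMP_EXCERPT))

if __name__ == "__main__":
    for name, value in summary.items():
        print(f"{name}: {value:,.2f}")
```

The per-item average comes out far larger than a 64 KB chunk, which suggests the accounted buffers are much bigger than the payload slices being processed.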

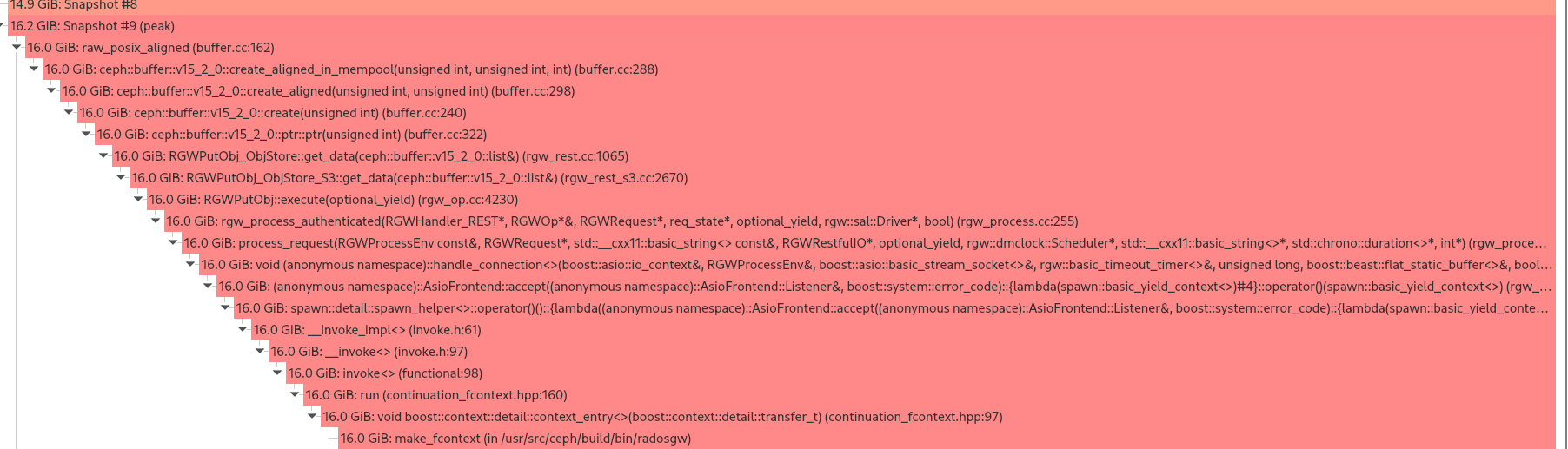

Another run under valgrind shows that most of the memory is held by buffer::ptr objects allocated in RGWPutObj_ObjStore::get_data(bufferlist& bl).


Updated by Casey Bodley 8 months ago

- Status changed from New to Fix Under Review

- Tags set to aws-chunked

- Pull request ID set to 53266

Updated by Casey Bodley 7 months ago

- Status changed from Fix Under Review to Pending Backport

- Backport set to pacific quincy reef

Updated by Backport Bot 7 months ago

- Copied to Backport #63055: pacific: high virtual memory consumption when dealing with Chunked Upload added

Updated by Backport Bot 7 months ago

- Copied to Backport #63056: quincy: high virtual memory consumption when dealing with Chunked Upload added

Updated by Backport Bot 7 months ago

- Copied to Backport #63057: reef: high virtual memory consumption when dealing with Chunked Upload added

Updated by Backport Bot 7 months ago

- Tags changed from aws-chunked to aws-chunked backport_processed

Updated by Konstantin Shalygin 6 months ago

- Status changed from Pending Backport to Resolved

- Target version set to v19.0.0

- % Done changed from 0 to 100

- Source set to Community (user)
